FreeRTOS system configuration

The FreeRTOS configuration file is FreeRTOSConfig.h. This is where FreeRTOS is trimmed and configured, and both are implemented with conditional compilation.

Macros starting with "INCLUDE_" enable or disable the corresponding FreeRTOS API functions. For example, INCLUDE_vTaskPrioritySet determines whether vTaskPrioritySet() can be used.

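Macros starting with "config" are also used to trim and configure FreeRTOS, for example:

configAPPLICATION_ALLOCATED_HEAP: if it is disabled (0), the FreeRTOS heap is allocated by the compiler; if it is enabled (1), the heap memory is provided by the user.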

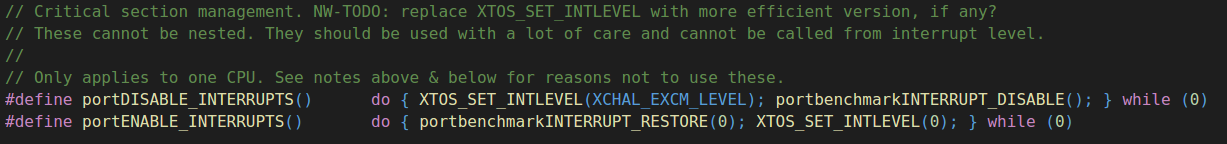

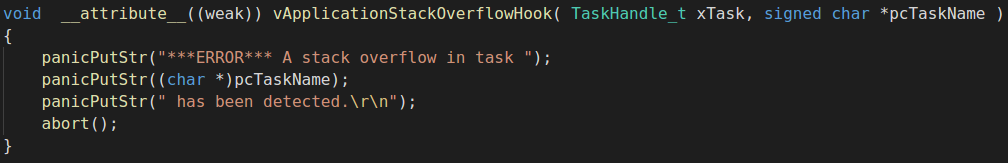
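A minimal sketch of what enabling the macro means in practice, assuming one of the stock FreeRTOS heap schemes that manage a static ucHeap array (heap_1, heap_2 or heap_4). With configAPPLICATION_ALLOCATED_HEAP set to 1 the heap source file only declares `extern uint8_t ucHeap[ configTOTAL_HEAP_SIZE ];`, so the application must define the array itself, typically to place it in a particular memory region. The 32 KiB size is an arbitrary example value.

```c
#include <stddef.h>
#include <stdint.h>

/* FreeRTOSConfig.h (fragment) */
#define configTOTAL_HEAP_SIZE            ( ( size_t ) ( 32 * 1024 ) )  /* example size */
#define configAPPLICATION_ALLOCATED_HEAP 1

/* In one application source file: the array the heap scheme refers to
   through its extern declaration. */
uint8_t ucHeap[ configTOTAL_HEAP_SIZE ];
```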

If configCHECK_FOR_STACK_OVERFLOW is non-zero, the user must provide the hook function vApplicationStackOverflowHook().

![PEB4SS](PEB4SS.png)

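If the overflow is severe, the two parameters passed to the hook may themselves be corrupted; in that case, inspect the variable pxCurrentTCB to find out which task overflowed. In this port the kernel calls the hook as follows:

```c
vApplicationStackOverflowHook( ( TaskHandle_t ) pxCurrentTCB[ xPortGetCoreID() ], pxCurrentTCB[ xPortGetCoreID() ]->pcTaskName );
```

There are two methods of detecting a stack overflow: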

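Method 1 (configCHECK_FOR_STACK_OVERFLOW = 1): a context switch saves the task's context on its stack, so this is when the task's stack usage is most likely to be at its peak. Method 1 checks at that moment whether the task's stack pointer still points into valid stack space, and calls the hook if it does not. It is fast, but it cannot catch every overflow.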

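Method 2 (configCHECK_FOR_STACK_OVERFLOW = 2): when a task is created, its stack is filled with a known marker value. The kernel then checks whether the last few bytes at the far end of the stack have been overwritten, and calls the hook if they have. Method 2 catches almost all stack overflows.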

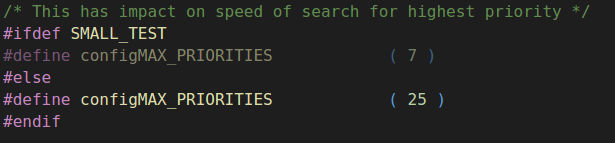
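A host-side sketch of the two checks, assuming a downward-growing stack (as on the ESP32) modelled as a plain byte array. The fill value 0xA5 matches FreeRTOS's tskSTACK_FILL_BYTE; the 16-byte guard size and all function names are assumptions for illustration, not the kernel's own macros.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define STACK_FILL_BYTE 0xA5u  /* same value as FreeRTOS's tskSTACK_FILL_BYTE */
#define GUARD_BYTES     16u    /* hypothetical: how many bytes method 2 inspects */

/* Method 1 (sketch): for a downward-growing stack, the saved stack pointer
   must still lie above the lowest address of the stack. */
static bool saved_sp_valid(const uint8_t *stack_base, const uint8_t *saved_sp)
{
    return saved_sp > stack_base;
}

/* Done once at task creation: paint the whole stack with the fill byte. */
static void stack_paint(uint8_t *stack, size_t size)
{
    assert(stack != NULL);
    memset(stack, STACK_FILL_BYTE, size);
}

/* Method 2 (sketch): the far end of a downward-growing stack is its lowest
   address, so check that the first GUARD_BYTES still hold the fill byte. */
static bool guard_intact(const uint8_t *stack, size_t size)
{
    size_t n = size < GUARD_BYTES ? size : GUARD_BYTES;
    for (size_t i = 0; i < n; i++) {
        if (stack[i] != STACK_FILL_BYTE) {
            return false;  /* the task has written into the guard area */
        }
    }
    return true;
}
```

Method 2 is more thorough because it detects an overflow that happened at any time since the last check, whereas method 1 only sees where the stack pointer is at the moment of the switch.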

configMAX_PRIORITIES sets the number of task priorities. Tasks can then use priorities 0 to configMAX_PRIORITIES - 1, where 0 is the lowest priority.

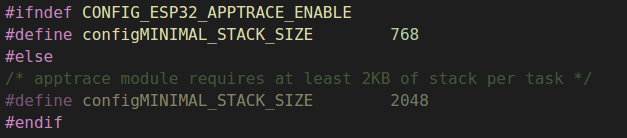
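A tiny sketch of what happens when a task is created with an out-of-range priority, modelled on the clamp performed in prvInitialiseNewTask(). MAX_PRIORITIES = 5 is an example value and clamp_priority is a hypothetical name.

```c
#include <assert.h>

#define MAX_PRIORITIES 5u      /* example value for configMAX_PRIORITIES */

typedef unsigned int prio_t;   /* stand-in for UBaseType_t */

/* Valid priorities are 0 (lowest) .. MAX_PRIORITIES - 1 (highest); larger
   requests are silently lowered to the highest valid priority. */
static prio_t clamp_priority(prio_t requested)
{
    return (requested >= MAX_PRIORITIES) ? (MAX_PRIORITIES - 1u) : requested;
}
```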

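configMINIMAL_STACK_SIZE sets the minimum task stack size, which is used for the idle task, in words.

configTOTAL_HEAP_SIZE sets the size of the heap. When dynamic memory management is used, FreeRTOS takes the memory for tasks, semaphores, queues and so on from this user-specified region.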

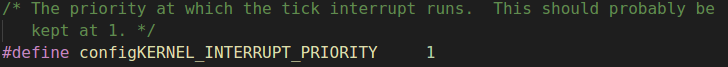
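A minimal sketch of the idea behind the simplest FreeRTOS heap scheme (heap_1): every allocation is carved out of one static array of configTOTAL_HEAP_SIZE bytes and nothing is ever freed. The names sketch_malloc and sketch_free_bytes, the 1024-byte size and the 8-byte alignment are assumptions for illustration; the real heap_1.c also suspends the scheduler around the allocation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define HEAP_SIZE 1024u   /* stands in for configTOTAL_HEAP_SIZE */
#define ALIGNMENT 8u      /* assumed portBYTE_ALIGNMENT */

static _Alignas(ALIGNMENT) uint8_t heap[HEAP_SIZE];
static size_t next_free = 0;   /* offset of the first unused byte */

/* Hand out the next aligned block, or NULL when the array is exhausted. */
static void *sketch_malloc(size_t wanted)
{
    /* Round the request up to a multiple of ALIGNMENT. */
    size_t rounded = (wanted + (ALIGNMENT - 1u)) & ~(size_t)(ALIGNMENT - 1u);
    if (wanted == 0 || rounded < wanted || rounded > HEAP_SIZE - next_free) {
        return NULL;
    }
    void *block = &heap[next_free];
    next_free += rounded;
    return block;
}

static size_t sketch_free_bytes(void)
{
    return HEAP_SIZE - next_free;
}
```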

configKERNEL_INTERRUPT_PRIORITY sets the priority of the kernel's own interrupt, the SysTick (tick) interrupt. In FreeRTOS the SysTick interrupt has the lowest priority.

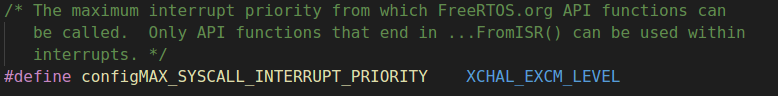

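configMAX_SYSCALL_INTERRUPT_PRIORITY sets the highest interrupt priority that FreeRTOS manages; its actual value here is 3. Interrupts with a higher priority are never masked by the FreeRTOS kernel, so they can be used for work with strict real-time requirements, but their interrupt service routines must not call any FreeRTOS API function. Interrupts at or below this priority (the priority itself included) can safely call the API functions whose names end in FromISR.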
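The rule can be written as a small classification function. This sketch assumes the Xtensa convention used by the ESP32 port, where a larger interrupt level means a higher priority (on Cortex-M the numeric order is reversed); level 3 is the value quoted above, and the type and function names are hypothetical.

```c
#include <assert.h>

#define MAX_SYSCALL_LEVEL 3   /* value quoted above for configMAX_SYSCALL_INTERRUPT_PRIORITY */

typedef enum {
    IRQ_KERNEL_AWARE,   /* masked by the kernel; may call ...FromISR() APIs */
    IRQ_ABOVE_KERNEL    /* never masked by the kernel; must not call any FreeRTOS API */
} irq_class_t;

/* Larger level = higher priority (Xtensa convention assumed). */
static irq_class_t classify_irq_level(int level)
{
    assert(level >= 1);
    return (level <= MAX_SYSCALL_LEVEL) ? IRQ_KERNEL_AWARE : IRQ_ABOVE_KERNEL;
}
```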

Tasks in FreeRTOS

Task control block

When xTaskCreate() creates a task, a task control block (TCB) is allocated for it automatically:

```c
typedef struct tskTaskControlBlock
{
    volatile StackType_t *pxTopOfStack;   /* Must be the first member: last item placed on this task's stack */

    #if ( portUSING_MPU_WRAPPERS == 1 )
        xMPU_SETTINGS xMPUSettings;
    #endif

    ListItem_t xGenericListItem;          /* Places the task in a state list (ready, delayed, suspended) */
    ListItem_t xEventListItem;            /* Places the task in an event list (e.g. waiting on a queue) */
    UBaseType_t uxPriority;               /* 0 is the lowest priority */
    StackType_t *pxStack;                 /* Start of the stack memory */
    char pcTaskName[ configMAX_TASK_NAME_LEN ];
    BaseType_t xCoreID;                   /* Core the task is pinned to, or tskNO_AFFINITY */

    #if ( portSTACK_GROWTH > 0 || configENABLE_TASK_SNAPSHOT == 1 )
        StackType_t *pxEndOfStack;
    #endif

    #if ( portCRITICAL_NESTING_IN_TCB == 1 )
        UBaseType_t uxCriticalNesting;
        uint32_t uxOldInterruptState;
    #endif

    #if ( configUSE_TRACE_FACILITY == 1 )
        UBaseType_t uxTCBNumber;
        UBaseType_t uxTaskNumber;
    #endif

    #if ( configUSE_MUTEXES == 1 )
        UBaseType_t uxBasePriority;       /* Priority before any priority inheritance */
        UBaseType_t uxMutexesHeld;
    #endif

    #if ( configUSE_APPLICATION_TASK_TAG == 1 )
        TaskHookFunction_t pxTaskTag;
    #endif

    #if ( configNUM_THREAD_LOCAL_STORAGE_POINTERS > 0 )
        void *pvThreadLocalStoragePointers[ configNUM_THREAD_LOCAL_STORAGE_POINTERS ];
        #if ( configTHREAD_LOCAL_STORAGE_DELETE_CALLBACKS )
            TlsDeleteCallbackFunction_t pvThreadLocalStoragePointersDelCallback[ configNUM_THREAD_LOCAL_STORAGE_POINTERS ];
        #endif
    #endif

    #if ( configGENERATE_RUN_TIME_STATS == 1 )
        uint32_t ulRunTimeCounter;        /* Total time spent in the Running state */
    #endif

    #if ( configUSE_NEWLIB_REENTRANT == 1 )
        struct _reent xNewLib_reent;
    #endif

    #if ( configUSE_TASK_NOTIFICATIONS == 1 )
        volatile uint32_t ulNotifiedValue;
        volatile eNotifyValue eNotifyState;
    #endif

    #if ( tskSTATIC_AND_DYNAMIC_ALLOCATION_POSSIBLE != 0 )
        uint8_t ucStaticallyAllocated;    /* Whether stack and TCB were allocated statically or dynamically */
    #endif
} tskTCB;

typedef tskTCB TCB_t;
```

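Task stack

When the scheduler switches tasks, it saves the current task's context (CPU register values and so on) on that task's stack. The next time the task runs, the saved values are used to restore the context, and the task continues from where it was interrupted. When a task is created dynamically, its stack is allocated automatically; when it is created statically, the user must provide the stack. The stack's element type is StackType_t, which is 4 bytes wide here, so for a dynamically created task the stack size in bytes is 4 times the value passed in.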

```c
#define portSTACK_TYPE  uint32_t
typedef portSTACK_TYPE StackType_t;
```
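The byte count and the initial top of stack can be worked out as below. This sketch assumes, as stated above, a 4-byte StackType_t and a downward-growing stack, plus 8-byte alignment (a portBYTE_ALIGNMENT_MASK of 7, an assumption). It reproduces the pxTopOfStack calculation performed by prvInitialiseNewTask(); the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t StackType_t;      /* as defined above */
#define BYTE_ALIGNMENT_MASK 0x7u   /* assumed 8-byte alignment */

/* The depth counts StackType_t words, so the allocation is depth * 4 bytes. */
static size_t stack_bytes(uint32_t depth)
{
    return (size_t)depth * sizeof(StackType_t);
}

/* Stack grows down: start at the last word, then round the address down
   to the alignment boundary. */
static StackType_t *initial_top_of_stack(StackType_t *stack, uint32_t depth)
{
    assert(depth > 0);
    StackType_t *top = stack + (depth - 1u);
    return (StackType_t *)((uintptr_t)top & ~(uintptr_t)BYTE_ALIGNMENT_MASK);
}
```

With 16 words the last word sits 60 bytes above the base, which rounds down to 56 bytes, i.e. word 14.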

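Task creation and deletion API functions

| Function | Description |
| --- | --- |
| xTaskCreate() | Create a task using dynamic memory allocation |
| xTaskCreateStatic() | Create a task using static memory (the stack and TCB are supplied by the user) |
| xTaskCreateRestricted() | Create a task restricted by the MPU; its memory is allocated dynamically |
| vTaskDelete() | Delete a task |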

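Task suspend and resume API functions

| Function | Description |
| --- | --- |
| vTaskSuspend() | Suspend a task, given its handle; NULL means the calling task |
| vTaskResume() | Resume a suspended task |
| xTaskResumeFromISR() | Resume a task from an interrupt service routine. A return value of pdTRUE means the resumed task's priority is equal to or higher than that of the running (interrupted) task, so a context switch must be performed before leaving the ISR (call portYIELD_FROM_ISR()). pdFALSE means its priority is lower, so no context switch is needed on exit |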
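The return value of xTaskResumeFromISR() reduces to a priority comparison. A minimal sketch with hypothetical names; in a real ISR the result is used as `if( xTaskResumeFromISR( xHandle ) == pdTRUE ) { portYIELD_FROM_ISR(); }`, where xHandle stands for the handle of the suspended task.

```c
#include <assert.h>
#include <stdbool.h>

typedef unsigned int prio_t;   /* stand-in for UBaseType_t */

/* A switch is needed when the resumed task's priority is equal to or
   higher than that of the task the interrupt preempted. */
static bool yield_needed_after_resume(prio_t resumed, prio_t interrupted)
{
    return resumed >= interrupted;
}
```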

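Other commonly used API functions (some require the corresponding macro to be enabled in the configuration file)

| Function | Description |
| --- | --- |
| xTaskGetHandle() | Get a task's handle from its name |
| vTaskStartScheduler() | Start the scheduler |
| vTaskSuspendAll() | Suspend the scheduler; calls can be nested |
| vTaskResumeAll() | Resume the scheduler |
| vTaskDelay() | Delay the calling task; the unit is tick periods |
| uxTaskPriorityGet() | Get the priority of a task |
| vTaskPrioritySet() | Change the priority of a task |
| uxTaskGetSystemState() | Get the state of every task in the system |
| vTaskGetInfo() | Get the state of a single task |
| xTaskGetCurrentTaskHandle() | Get the handle of the current task |
| xTaskGetIdleTaskHandle() | Return the handle of the idle task |
| uxTaskGetStackHighWaterMark() | Return the minimum amount of free stack the task has had since it was created; FreeRTOS calls this historical minimum the "high water mark" |
| eTaskGetState() | Query the state of a task |
| pcTaskGetName() | Get a task's name from its handle |
| xTaskGetTickCount() / xTaskGetTickCountFromISR() | Return xTickCount, the number of ticks since the scheduler started; it is incremented by 1 on every tick interrupt |
| xTaskGetSchedulerState() | Report whether the scheduler is running, not started, or suspended |
| uxTaskGetNumberOfTasks() | Return the number of tasks currently in the system |
| vTaskList() | Produce a table describing each task in detail |
| vTaskGetRunTimeStats() | Produce run-time statistics, i.e. the total CPU time each task has received |
| vTaskSetThreadLocalStoragePointer() | Set a thread-local storage pointer. Each task has its own array of such pointers in its TCB, for application data that belongs only to that task |
| pvTaskGetThreadLocalStoragePointer() | Get a thread-local storage pointer |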
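The high water mark reported by uxTaskGetStackHighWaterMark() comes from the same fill pattern used by stack overflow method 2: the kernel counts how many bytes at the far end of the stack still hold the fill value, then divides by sizeof(StackType_t). A host-side sketch of the counting step, assuming a downward-growing stack; the function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define STACK_FILL_BYTE 0xA5u   /* tskSTACK_FILL_BYTE in FreeRTOS */

/* Count how many bytes at the low end of a downward-growing stack were never
   overwritten: the minimum free space the task has ever had, in bytes. */
static size_t high_water_mark_bytes(const uint8_t *stack, size_t size)
{
    size_t untouched = 0;
    while (untouched < size && stack[untouched] == STACK_FILL_BYTE) {
        untouched++;
    }
    return untouched;
}
```

A value close to zero means the task has come close to overflowing and its stack should be enlarged.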

Enabling and disabling interrupts in FreeRTOS

![PEzUKK](PEzUKK.png)

Disabling interrupts means that interrupts with a priority at or below XCHAL_EXCM_LEVEL are masked.

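FreeRTOS critical section protection

| Function/macro | Description |
| --- | --- |
| taskENTER_CRITICAL() | Enter a critical section at task level |
| taskEXIT_CRITICAL() | Exit a critical section at task level |
| taskENTER_CRITICAL_FROM_ISR() | Enter a critical section from an ISR (the interrupt's priority must not be higher than configMAX_SYSCALL_INTERRUPT_PRIORITY) |
| taskEXIT_CRITICAL_FROM_ISR() | Exit a critical section from an ISR |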
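Critical sections can be nested, so the enter/exit pair keeps a nesting count and only the outermost exit re-enables interrupts (FreeRTOS keeps such a count, for example in the TCB field uxCriticalNesting when portCRITICAL_NESTING_IN_TCB is 1). A minimal single-core model with hypothetical names; the ESP32 port in this article additionally passes a spinlock (taskENTER_CRITICAL(&xTaskQueueMutex)) to exclude the other core, which this sketch does not model.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical single-core model: irq_masked stands in for the CPU
   interrupt mask, nesting for the critical-section nesting count. */
static bool irq_masked = false;
static unsigned nesting = 0;

static void sketch_enter_critical(void)
{
    irq_masked = true;   /* mask kernel-aware interrupts first */
    nesting++;           /* then record one more nesting level */
}

static void sketch_exit_critical(void)
{
    assert(nesting > 0); /* an exit without a matching enter is a bug */
    nesting--;
    if (nesting == 0) {
        irq_masked = false;  /* only the outermost exit re-enables interrupts */
    }
}
```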

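FreeRTOS lists (doubly linked circular lists) and list items

```c
typedef struct xLIST
{
    listFIRST_LIST_INTEGRITY_CHECK_VALUE
    configLIST_VOLATILE UBaseType_t uxNumberOfItems;    /* Number of items in the list */
    ListItem_t * configLIST_VOLATILE pxIndex;           /* Traversal cursor used by listGET_OWNER_OF_NEXT_ENTRY() */
    MiniListItem_t xListEnd;                            /* End marker; its xItemValue is portMAX_DELAY */
    listSECOND_LIST_INTEGRITY_CHECK_VALUE
} List_t;
```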

```c
struct xLIST_ITEM
{
    listFIRST_LIST_ITEM_INTEGRITY_CHECK_VALUE
    configLIST_VOLATILE TickType_t xItemValue;            /* Sort key used by vListInsert() */
    struct xLIST_ITEM * configLIST_VOLATILE pxNext;       /* Next item in the list */
    struct xLIST_ITEM * configLIST_VOLATILE pxPrevious;   /* Previous item in the list */
    void * pvOwner;                                       /* Object containing this item, usually a TCB */
    void * configLIST_VOLATILE pvContainer;               /* List this item is currently in, if any */
    listSECOND_LIST_ITEM_INTEGRITY_CHECK_VALUE
};
typedef struct xLIST_ITEM ListItem_t;
```

```c
/* Reduced item used only as the list's end marker (no pvOwner / pvContainer). */
struct xMINI_LIST_ITEM
{
    listFIRST_LIST_ITEM_INTEGRITY_CHECK_VALUE
    configLIST_VOLATILE TickType_t xItemValue;
    struct xLIST_ITEM * configLIST_VOLATILE pxNext;
    struct xLIST_ITEM * configLIST_VOLATILE pxPrevious;
};
typedef struct xMINI_LIST_ITEM MiniListItem_t;
```

List operation API

| Function | Description |
| --- | --- |
| `void vListInitialise( List_t * const pxList )` | Initialise a list |
| `void vListInitialiseItem( ListItem_t * const pxItem )` | Initialise a list item |
| `void vListInsert( List_t * const pxList, ListItem_t * const pxNewListItem )` | Insert an item at its sorted position |
| `void vListInsertEnd( List_t * const pxList, ListItem_t * const pxNewListItem )` | Insert an item at the end |
| `UBaseType_t uxListRemove( ListItem_t * const pxItemToRemove )` | Remove an item |
| `listGET_OWNER_OF_NEXT_ENTRY( pxTCB, pxList )` | Traverse the list |

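In vListInsert(), the insertion position is determined by the item's xItemValue, and items are kept in ascending order. For example, an xItemValue of portMAX_DELAY places the item at the very end of the list.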

In vListInsertEnd(), the item is inserted immediately before the item that pxList->pxIndex points to.

uxListRemove() returns the number of items left in the list after the removal. It only unlinks the item from the list; it does not free the item's memory.

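The list member pxIndex is used to traverse the list: each call to the macro listGET_OWNER_OF_NEXT_ENTRY advances pxIndex to the next list item (skipping the xListEnd marker) and returns that item's pvOwner value.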
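The list behaviour described above (sorted insertion, insertion just before pxIndex, unlink-only removal, and cursor traversal that skips the end marker) can be reproduced in a small host-side model. The types and functions below are simplified, hypothetical stand-ins for List_t, ListItem_t, vListInsert(), vListInsertEnd(), uxListRemove() and listGET_OWNER_OF_NEXT_ENTRY(), not the kernel code itself; integrity-check fields and volatile qualifiers are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t TickType_t;
#define MAX_DELAY ((TickType_t)0xFFFFFFFFu)   /* plays the role of portMAX_DELAY */

typedef struct Item {
    TickType_t value;          /* xItemValue */
    struct Item *next;         /* pxNext */
    struct Item *prev;         /* pxPrevious */
    void *owner;               /* pvOwner */
    struct List *container;    /* pvContainer */
} Item;

typedef struct List {
    size_t count;              /* uxNumberOfItems */
    Item *index;               /* pxIndex: traversal cursor */
    Item end;                  /* xListEnd: sentinel holding MAX_DELAY */
} List;

static void list_init(List *l)
{
    l->end.value = MAX_DELAY;
    l->end.next = &l->end;     /* empty circular list: sentinel points to itself */
    l->end.prev = &l->end;
    l->end.owner = NULL;
    l->end.container = NULL;
    l->index = &l->end;
    l->count = 0;
}

/* Like vListInsertEnd(): place the item just before the cursor. */
static void list_insert_end(List *l, Item *it)
{
    Item *idx = l->index;
    it->next = idx;
    it->prev = idx->prev;
    idx->prev->next = it;
    idx->prev = it;
    it->container = l;
    l->count++;
}

/* Like vListInsert(): ascending order; equal values go after existing ones. */
static void list_insert_sorted(List *l, Item *it)
{
    Item *pos;
    if (it->value == MAX_DELAY) {
        pos = l->end.prev;     /* straight to the end */
    } else {
        /* The sentinel's MAX_DELAY value guarantees the walk stops. */
        for (pos = &l->end; pos->next->value <= it->value; pos = pos->next) {
        }
    }
    it->next = pos->next;
    it->next->prev = it;
    it->prev = pos;
    pos->next = it;
    it->container = l;
    l->count++;
}

/* Like uxListRemove(): unlink only (no free), return the remaining count. */
static size_t list_remove(Item *it)
{
    List *l = it->container;
    it->next->prev = it->prev;
    it->prev->next = it->next;
    if (l->index == it) {
        l->index = it->prev;   /* keep the cursor on a linked item */
    }
    it->container = NULL;
    return --l->count;
}

/* Like listGET_OWNER_OF_NEXT_ENTRY(): advance the cursor, skipping the
   sentinel. Only meaningful on a non-empty list. */
static void *list_next_owner(List *l)
{
    l->index = l->index->next;
    if (l->index == &l->end) {
        l->index = l->index->next;
    }
    return l->index->owner;
}
```

Because list_insert_end() places a new item just before the cursor, an item added while traversal is in progress is visited last; this is what gives equal-priority ready tasks their round-robin order.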

The FreeRTOS scheduler

vTaskStartScheduler function

```c
void vTaskStartScheduler( void )
{
    BaseType_t xReturn;
    BaseType_t i;

    /* Create one idle task per core, pinned to that core. */
    for( i = 0; i < portNUM_PROCESSORS; i++ )
    {
        #if ( INCLUDE_xTaskGetIdleTaskHandle == 1 )
        {
            xReturn = xTaskCreatePinnedToCore( prvIdleTask, "IDLE", tskIDLE_STACK_SIZE, ( void * ) NULL, ( tskIDLE_PRIORITY | portPRIVILEGE_BIT ), &xIdleTaskHandle[ i ], i );
        }
        #else
        {
            xReturn = xTaskCreatePinnedToCore( prvIdleTask, "IDLE", tskIDLE_STACK_SIZE, ( void * ) NULL, ( tskIDLE_PRIORITY | portPRIVILEGE_BIT ), NULL, i );
        }
        #endif
    }

    #if ( configUSE_TIMERS == 1 )
    {
        if( xReturn == pdPASS )
        {
            xReturn = xTimerCreateTimerTask();
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    #endif

    if( xReturn == pdPASS )
    {
        portDISABLE_INTERRUPTS();
        xTickCount = ( TickType_t ) 0U;
        portCONFIGURE_TIMER_FOR_RUN_TIME_STATS();
        xSchedulerRunning = pdTRUE;

        if( xPortStartScheduler() != pdFALSE )
        {
            /* Not reached: once the scheduler runs, this call does not return. */
        }
        else
        {
            /* Only reached if a task calls xTaskEndScheduler(). */
        }
    }
    else
    {
        configASSERT( xReturn );
    }
}
```

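xPortStartScheduler function

```c
BaseType_t xPortStartScheduler( void )
{
    #if XCHAL_CP_NUM > 0
    /* Initialise coprocessor management for tasks. */
    _xt_coproc_init();
    #endif

    /* Initialise the tick divisor and start the periodic tick timer. */
    _xt_tick_divisor_init();
    _frxt_tick_timer_init();

    port_xSchedulerRunning[ xPortGetCoreID() ] = 1;

    /* Restore the context of the first task to run; does not return. */
    __asm__ volatile ( "call0    _frxt_dispatch\n" );

    return pdTRUE;
}
```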

xTaskCreatePinnedToCore function

```c
BaseType_t xTaskCreatePinnedToCore( TaskFunction_t pxTaskCode,
                                    const char * const pcName,
                                    const uint32_t usStackDepth,
                                    void * const pvParameters,
                                    UBaseType_t uxPriority,
                                    TaskHandle_t * const pxCreatedTask,
                                    const BaseType_t xCoreID )
{
    TCB_t *pxNewTCB;
    BaseType_t xReturn;

    #if ( portSTACK_GROWTH > 0 )
    {
        /* Stack grows up: allocate the TCB first so the stack cannot grow into it. */
        pxNewTCB = ( TCB_t * ) pvPortMallocTcbMem( sizeof( TCB_t ) );

        if( pxNewTCB != NULL )
        {
            pxNewTCB->pxStack = ( StackType_t * ) pvPortMallocStackMem( ( ( ( size_t ) usStackDepth ) * sizeof( StackType_t ) ) );

            if( pxNewTCB->pxStack == NULL )
            {
                vPortFree( pxNewTCB );
                pxNewTCB = NULL;
            }
        }
    }
    #else
    {
        /* Stack grows down: allocate the stack first so it cannot grow into the TCB. */
        StackType_t *pxStack;
        pxStack = ( StackType_t * ) pvPortMallocStackMem( ( ( ( size_t ) usStackDepth ) * sizeof( StackType_t ) ) );

        if( pxStack != NULL )
        {
            pxNewTCB = ( TCB_t * ) pvPortMallocTcbMem( sizeof( TCB_t ) );

            if( pxNewTCB != NULL )
            {
                pxNewTCB->pxStack = pxStack;
            }
            else
            {
                vPortFree( pxStack );
            }
        }
        else
        {
            pxNewTCB = NULL;
        }
    }
    #endif

    if( pxNewTCB != NULL )
    {
        #if ( tskSTATIC_AND_DYNAMIC_ALLOCATION_POSSIBLE != 0 )
        {
            pxNewTCB->ucStaticallyAllocated = tskDYNAMICALLY_ALLOCATED_STACK_AND_TCB;
        }
        #endif

        prvInitialiseNewTask( pxTaskCode, pcName, usStackDepth, pvParameters, uxPriority, pxCreatedTask, pxNewTCB, NULL, xCoreID );
        prvAddNewTaskToReadyList( pxNewTCB, pxTaskCode, xCoreID );
        xReturn = pdPASS;
    }
    else
    {
        xReturn = errCOULD_NOT_ALLOCATE_REQUIRED_MEMORY;
    }

    return xReturn;
}
```

prvInitialiseNewTask function

```c
static void prvInitialiseNewTask( TaskFunction_t pxTaskCode,
                                  const char * const pcName,
                                  const uint32_t ulStackDepth,
                                  void * const pvParameters,
                                  UBaseType_t uxPriority,
                                  TaskHandle_t * const pxCreatedTask,
                                  TCB_t *pxNewTCB,
                                  const MemoryRegion_t * const xRegions,
                                  const BaseType_t xCoreID )
{
    StackType_t *pxTopOfStack;
    UBaseType_t x;

    #if ( portUSING_MPU_WRAPPERS == 1 )
        /* Should the task be created in privileged mode? */
        BaseType_t xRunPrivileged;
        if( ( uxPriority & portPRIVILEGE_BIT ) != 0U )
        {
            xRunPrivileged = pdTRUE;
        }
        else
        {
            xRunPrivileged = pdFALSE;
        }
        uxPriority &= ~portPRIVILEGE_BIT;
    #endif

    /* Fill the stack with a known value (used by overflow method 2 and the high water mark). */
    #if ( ( configCHECK_FOR_STACK_OVERFLOW > 1 ) || ( configUSE_TRACE_FACILITY == 1 ) || ( INCLUDE_uxTaskGetStackHighWaterMark == 1 ) )
    {
        ( void ) memset( pxNewTCB->pxStack, ( int ) tskSTACK_FILL_BYTE, ( size_t ) ulStackDepth * sizeof( StackType_t ) );
    }
    #endif

    /* Compute the top-of-stack address, aligned to portBYTE_ALIGNMENT. */
    #if ( portSTACK_GROWTH < 0 )
    {
        pxTopOfStack = pxNewTCB->pxStack + ( ulStackDepth - ( uint32_t ) 1 );
        pxTopOfStack = ( StackType_t * ) ( ( ( portPOINTER_SIZE_TYPE ) pxTopOfStack ) & ( ~( ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) ) );

        configASSERT( ( ( ( portPOINTER_SIZE_TYPE ) pxTopOfStack & ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) == 0UL ) );

        #if ( configENABLE_TASK_SNAPSHOT == 1 )
        {
            pxNewTCB->pxEndOfStack = pxTopOfStack;
        }
        #endif
    }
    #else
    {
        pxTopOfStack = pxNewTCB->pxStack;

        configASSERT( ( ( ( portPOINTER_SIZE_TYPE ) pxNewTCB->pxStack & ( portPOINTER_SIZE_TYPE ) portBYTE_ALIGNMENT_MASK ) == 0UL ) );

        pxNewTCB->pxEndOfStack = pxNewTCB->pxStack + ( ulStackDepth - ( uint32_t ) 1 );
    }
    #endif

    /* Copy the task name into the TCB. */
    for( x = ( UBaseType_t ) 0; x < ( UBaseType_t ) configMAX_TASK_NAME_LEN; x++ )
    {
        pxNewTCB->pcTaskName[ x ] = pcName[ x ];

        if( pcName[ x ] == 0x00 )
        {
            break;
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }

    /* Terminate the name even if it was truncated. */
    pxNewTCB->pcTaskName[ configMAX_TASK_NAME_LEN - 1 ] = '\0';

    /* Clamp the priority to configMAX_PRIORITIES - 1. */
    if( uxPriority >= ( UBaseType_t ) configMAX_PRIORITIES )
    {
        uxPriority = ( UBaseType_t ) configMAX_PRIORITIES - ( UBaseType_t ) 1U;
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }

    pxNewTCB->uxPriority = uxPriority;
    pxNewTCB->xCoreID = xCoreID;

    #if ( configUSE_MUTEXES == 1 )
    {
        pxNewTCB->uxBasePriority = uxPriority;
        pxNewTCB->uxMutexesHeld = 0;
    }
    #endif

    vListInitialiseItem( &( pxNewTCB->xGenericListItem ) );
    vListInitialiseItem( &( pxNewTCB->xEventListItem ) );

    /* Link the list items back to the TCB so a list can find its owner. */
    listSET_LIST_ITEM_OWNER( &( pxNewTCB->xGenericListItem ), pxNewTCB );

    /* Event lists are sorted ascending, so store the inverted priority:
       a higher priority gives a smaller value and sorts first. */
    listSET_LIST_ITEM_VALUE( &( pxNewTCB->xEventListItem ), ( TickType_t ) configMAX_PRIORITIES - ( TickType_t ) uxPriority );
    listSET_LIST_ITEM_OWNER( &( pxNewTCB->xEventListItem ), pxNewTCB );

    #if ( portCRITICAL_NESTING_IN_TCB == 1 )
    {
        pxNewTCB->uxCriticalNesting = ( UBaseType_t ) 0U;
    }
    #endif

    #if ( configUSE_APPLICATION_TASK_TAG == 1 )
    {
        pxNewTCB->pxTaskTag = NULL;
    }
    #endif

    #if ( configGENERATE_RUN_TIME_STATS == 1 )
    {
        pxNewTCB->ulRunTimeCounter = 0UL;
    }
    #endif

    #if ( portUSING_MPU_WRAPPERS == 1 )
    {
        vPortStoreTaskMPUSettings( &( pxNewTCB->xMPUSettings ), xRegions, pxNewTCB->pxStack, ulStackDepth );
    }
    #else
    {
        ( void ) xRegions;   /* Unused without MPU support. */
    }
    #endif

    #if ( configNUM_THREAD_LOCAL_STORAGE_POINTERS != 0 )
    {
        for( x = 0; x < ( UBaseType_t ) configNUM_THREAD_LOCAL_STORAGE_POINTERS; x++ )
        {
            pxNewTCB->pvThreadLocalStoragePointers[ x ] = NULL;
            #if ( configTHREAD_LOCAL_STORAGE_DELETE_CALLBACKS == 1 )
            pxNewTCB->pvThreadLocalStoragePointersDelCallback[ x ] = NULL;
            #endif
        }
    }
    #endif

    #if ( configUSE_TASK_NOTIFICATIONS == 1 )
    {
        pxNewTCB->ulNotifiedValue = 0;
        pxNewTCB->eNotifyState = eNotWaitingNotification;
    }
    #endif

    #if ( configUSE_NEWLIB_REENTRANT == 1 )
    {
        esp_reent_init( &pxNewTCB->xNewLib_reent );
    }
    #endif

    #if ( INCLUDE_xTaskAbortDelay == 1 )
    {
        pxNewTCB->ucDelayAborted = pdFALSE;
    }
    #endif

    /* Build the initial stack frame so the task looks as if it had been
       interrupted and can be started by restoring that context. */
    #if ( portUSING_MPU_WRAPPERS == 1 )
    {
        pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxTaskCode, pvParameters, xRunPrivileged );
    }
    #else
    {
        pxNewTCB->pxTopOfStack = pxPortInitialiseStack( pxTopOfStack, pxTaskCode, pvParameters );
    }
    #endif

    if( ( void * ) pxCreatedTask != NULL )
    {
        /* The task handle is simply the TCB address. */
        *pxCreatedTask = ( TaskHandle_t ) pxNewTCB;
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }
}
```

pxPortInitialiseStack function

```c
StackType_t *pxPortInitialiseStack( StackType_t *pxTopOfStack, TaskFunction_t pxCode, void *pvParameters )
{
    StackType_t *sp, *tp;
    XtExcFrame  *frame;
    #if XCHAL_CP_NUM > 0
    uint32_t *p;
    #endif
    uint32_t *threadptr;
    void *task_thread_local_start;
    extern int _thread_local_start, _thread_local_end, _rodata_start;
    uint32_t thread_local_sz = (uint8_t *)&_thread_local_end - (uint8_t *)&_thread_local_start;

    thread_local_sz = ALIGNUP(0x10, thread_local_sz);

    /* Reserve room for coprocessor state, thread-local data and the
       exception frame below the top of stack, 16-byte aligned. */
    sp = (StackType_t *) (((UBaseType_t)(pxTopOfStack + 1) - XT_CP_SIZE - thread_local_sz - XT_STK_FRMSZ) & ~0xf);

    /* Zero everything from the new frame up to the top of stack. */
    for (tp = sp; tp <= pxTopOfStack; ++tp)
        *tp = 0;

    frame = (XtExcFrame *) sp;

    /* Initial register values for the first "return" into the task. */
    frame->pc   = (UBaseType_t) pxCode;              /* task entry point */
    frame->a0   = 0;                                 /* no return address */
    frame->a1   = (UBaseType_t) sp + XT_STK_FRMSZ;   /* task stack pointer */
    frame->exit = (UBaseType_t) _xt_user_exit;       /* exit dispatcher */

    #ifdef __XTENSA_CALL0_ABI__
    frame->a2 = (UBaseType_t) pvParameters;          /* first argument */
    frame->ps = PS_UM | PS_EXCM;
    #else
    /* Windowed ABI: pretend the task was entered via call4, so the argument goes in a6. */
    frame->a6 = (UBaseType_t) pvParameters;
    frame->ps = PS_UM | PS_EXCM | PS_WOE | PS_CALLINC(1);
    #endif

    #ifdef XT_USE_SWPRI
    frame->vpri = 0xFFFFFFFF;
    #endif

    /* Copy the thread-local template into this task's area and set up THREADPTR. */
    task_thread_local_start = (void *)(((uint32_t)pxTopOfStack - XT_CP_SIZE - thread_local_sz) & ~0xf);
    memcpy(task_thread_local_start, &_thread_local_start, thread_local_sz);
    threadptr = (uint32_t *)(sp + XT_STK_EXTRA);
    *threadptr = (uint32_t)task_thread_local_start - ((uint32_t)&_thread_local_start - (uint32_t)&_rodata_start) - 0x10;

    #if XCHAL_CP_NUM > 0
    /* Initialise the coprocessor save area at the top of the stack. */
    p = (uint32_t *)(((uint32_t) pxTopOfStack - XT_CP_SIZE) & ~0xf);
    p[0] = 0;
    p[1] = 0;
    p[2] = (((uint32_t) p) + 12 + XCHAL_TOTAL_SA_ALIGN - 1) & -XCHAL_TOTAL_SA_ALIGN;
    #endif

    return sp;
}
```

prvAddNewTaskToReadyList function

FreeRTOS uses different lists to represent the different task states (ready, delayed, suspended and so on).

```c
static void prvAddNewTaskToReadyList( TCB_t *pxNewTCB, TaskFunction_t pxTaskCode, BaseType_t xCoreID )
{
    TCB_t *curTCB, *tcb0, *tcb1;

    configASSERT( xCoreID == tskNO_AFFINITY || xCoreID < portNUM_PROCESSORS );

    taskENTER_CRITICAL( &xTaskQueueMutex );
    {
        uxCurrentNumberOfTasks++;

        /* No affinity: choose a core for the new task. */
        if( xCoreID == tskNO_AFFINITY )
        {
            if( portNUM_PROCESSORS == 1 )
            {
                xCoreID = 0;
            }
            else
            {
                tcb0 = pxCurrentTCB[ 0 ];
                tcb1 = pxCurrentTCB[ 1 ];
                if( tcb0 == NULL )
                {
                    xCoreID = 0;            /* core 0 has no task yet */
                }
                else if( tcb1 == NULL )
                {
                    xCoreID = 1;            /* core 1 has no task yet */
                }
                else if( tcb0->uxPriority < pxNewTCB->uxPriority && tcb0->uxPriority < tcb1->uxPriority )
                {
                    xCoreID = 0;
                }
                else if( tcb1->uxPriority < pxNewTCB->uxPriority )
                {
                    xCoreID = 1;
                }
                else
                {
                    xCoreID = xPortGetCoreID();   /* fall back to the calling core */
                }
            }
        }

        if( pxCurrentTCB[ xCoreID ] == NULL )
        {
            /* No task on this core yet: the new task becomes its current task. */
            pxCurrentTCB[ xCoreID ] = pxNewTCB;

            if( uxCurrentNumberOfTasks == ( UBaseType_t ) 1 )
            {
                #if portFIRST_TASK_HOOK
                if( xPortGetCoreID() == 0 )
                {
                    vPortFirstTaskHook( pxTaskCode );
                }
                #endif

                /* First task ever created: initialise the task lists. */
                prvInitialiseTaskLists();
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
        else
        {
            /* Before the scheduler starts, the highest-priority task created so far becomes current. */
            if( xSchedulerRunning == pdFALSE )
            {
                if( pxCurrentTCB[ xCoreID ] == NULL || pxCurrentTCB[ xCoreID ]->uxPriority <= pxNewTCB->uxPriority )
                {
                    pxCurrentTCB[ xCoreID ] = pxNewTCB;
                }
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }

        uxTaskNumber++;

        #if ( configUSE_TRACE_FACILITY == 1 )
        {
            pxNewTCB->uxTCBNumber = uxTaskNumber;
        }
        #endif

        traceTASK_CREATE( pxNewTCB );
        prvAddTaskToReadyList( pxNewTCB );
        portSETUP_TCB( pxNewTCB );
    }
    taskEXIT_CRITICAL( &xTaskQueueMutex );

    if( xSchedulerRunning != pdFALSE )
    {
        /* Preempt the task on the chosen core if the new task has a higher priority. */
        taskENTER_CRITICAL( &xTaskQueueMutex );
        curTCB = pxCurrentTCB[ xCoreID ];
        if( curTCB == NULL || curTCB->uxPriority < pxNewTCB->uxPriority )
        {
            if( xCoreID == xPortGetCoreID() )
            {
                taskYIELD_IF_USING_PREEMPTION();
            }
            else
            {
                taskYIELD_OTHER_CORE( xCoreID, pxNewTCB->uxPriority );
            }
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
        taskEXIT_CRITICAL( &xTaskQueueMutex );
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }
}
```
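The dual-core branch of the core-selection logic above can be isolated as a pure function, which makes its edge cases easy to check by hand. This is a hypothetical extraction: pick_core, and -1 meaning "no current task on that core" (pxCurrentTCB[n] == NULL), are assumptions.

```c
#include <assert.h>

/* prio0 / prio1: priority of the current task on core 0 / core 1, or -1 if
   that core has no current task yet. self: the core making the call. */
static int pick_core(int prio0, int prio1, int new_prio, int self)
{
    if (prio0 < 0) return 0;
    if (prio1 < 0) return 1;
    if (prio0 < new_prio && prio0 < prio1) return 0;
    if (prio1 < new_prio) return 1;
    return self;   /* both cores run tasks of equal or higher priority */
}
```

Note the asymmetry: if both cores run tasks of the same, lower priority, the third test fails (prio0 is not strictly below prio1) and core 1 is chosen.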

prvAddTaskToReadyList macro

```c
#define prvAddTaskToReadyList( pxTCB )                                  \
    traceMOVED_TASK_TO_READY_STATE( pxTCB );                            \
    taskRECORD_READY_PRIORITY( ( pxTCB )->uxPriority );                 \
    vListInsertEnd( &( pxReadyTasksLists[ ( pxTCB )->uxPriority ] ), &( ( pxTCB )->xGenericListItem ) )
```

vTaskDelete function

```c
void vTaskDelete( TaskHandle_t xTaskToDelete )
{
    TCB_t *pxTCB;
    int core = xPortGetCoreID();
    UBaseType_t free_now;

    taskENTER_CRITICAL( &xTaskQueueMutex );
    {
        /* A NULL handle means the calling task is being deleted. */
        pxTCB = prvGetTCBFromHandle( xTaskToDelete );

        /* Remove the task from its state list (ready, delayed, ...). */
        if( uxListRemove( &( pxTCB->xGenericListItem ) ) == ( UBaseType_t ) 0 )
        {
            taskRESET_READY_PRIORITY( pxTCB->uxPriority );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        /* Is the task also waiting on an event? */
        if( listLIST_ITEM_CONTAINER( &( pxTCB->xEventListItem ) ) != NULL )
        {
            ( void ) uxListRemove( &( pxTCB->xEventListItem ) );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        uxTaskNumber++;

        if( pxTCB == pxCurrentTCB[ core ] ||
            ( portNUM_PROCESSORS > 1 && pxTCB == pxCurrentTCB[ !core ] ) ||
            ( portNUM_PROCESSORS > 1 && pxTCB->xCoreID == ( !core ) ) )
        {
            /* The task is running on a core (or pinned to the other core), so it cannot be
               freed here; queue it for the idle task to clean up. */
            vListInsertEnd( &xTasksWaitingTermination, &( pxTCB->xGenericListItem ) );
            ++uxTasksDeleted;
            portPRE_TASK_DELETE_HOOK( pxTCB, &xYieldPending[ xPortGetCoreID() ] );
            free_now = pdFALSE;
        }
        else
        {
            --uxCurrentNumberOfTasks;
            /* The next unblock time may have referred to the deleted task. */
            prvResetNextTaskUnblockTime();
            free_now = pdTRUE;
        }

        traceTASK_DELETE( pxTCB );
    }
    taskEXIT_CRITICAL( &xTaskQueueMutex );

    if( free_now == pdTRUE )
    {
        #if ( configNUM_THREAD_LOCAL_STORAGE_POINTERS > 0 ) && ( configTHREAD_LOCAL_STORAGE_DELETE_CALLBACKS )
            prvDeleteTLS( pxTCB );
        #endif
        prvDeleteTCB( pxTCB );
    }

    /* Force a reschedule if a running task was deleted. */
    if( xSchedulerRunning != pdFALSE )
    {
        if( pxTCB == pxCurrentTCB[ core ] )
        {
            configASSERT( uxSchedulerSuspended[ core ] == 0 );
            portPRE_TASK_DELETE_HOOK( pxTCB, &xYieldPending[ xPortGetCoreID() ] );
            portYIELD_WITHIN_API();
        }
        else if( portNUM_PROCESSORS > 1 && pxTCB == pxCurrentTCB[ !core ] )
        {
            vPortYieldOtherCore( !core );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
}
```

vTaskSuspend function

```c
void vTaskSuspend( TaskHandle_t xTaskToSuspend )
{
    TCB_t *pxTCB;
    TCB_t *curTCB;

    taskENTER_CRITICAL( &xTaskQueueMutex );
    {
        /* A NULL handle means the calling task is being suspended. */
        pxTCB = prvGetTCBFromHandle( xTaskToSuspend );

        traceTASK_SUSPEND( pxTCB );

        /* Remove the task from its ready or delayed list. */
        if( uxListRemove( &( pxTCB->xGenericListItem ) ) == ( UBaseType_t ) 0 )
        {
            taskRESET_READY_PRIORITY( pxTCB->uxPriority );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        /* Is the task also waiting on an event? */
        if( listLIST_ITEM_CONTAINER( &( pxTCB->xEventListItem ) ) != NULL )
        {
            ( void ) uxListRemove( &( pxTCB->xEventListItem ) );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }

        traceMOVED_TASK_TO_SUSPENDED_LIST( pxTCB );
        vListInsertEnd( &xSuspendedTaskList, &( pxTCB->xGenericListItem ) );
        curTCB = pxCurrentTCB[ xPortGetCoreID() ];
    }
    taskEXIT_CRITICAL( &xTaskQueueMutex );

    if( pxTCB == curTCB )
    {
        if( xSchedulerRunning != pdFALSE )
        {
            /* The running task suspended itself: switch away now. */
            configASSERT( uxSchedulerSuspended[ xPortGetCoreID() ] == 0 );
            portYIELD_WITHIN_API();
        }
        else
        {
            /* Scheduler not started: pxCurrentTCB must be adjusted by hand. */
            if( listCURRENT_LIST_LENGTH( &xSuspendedTaskList ) == uxCurrentNumberOfTasks )
            {
                /* Every task is suspended, so no task is current; the next task
                   created will become pxCurrentTCB. */
                taskENTER_CRITICAL( &xTaskQueueMutex );
                pxCurrentTCB[ xPortGetCoreID() ] = NULL;
                taskEXIT_CRITICAL( &xTaskQueueMutex );
            }
            else
            {
                vTaskSwitchContext();
            }
        }
    }
    else
    {
        if( xSchedulerRunning != pdFALSE )
        {
            /* The next unblock time may have referred to the suspended task. */
            taskENTER_CRITICAL( &xTaskQueueMutex );
            {
                prvResetNextTaskUnblockTime();
            }
            taskEXIT_CRITICAL( &xTaskQueueMutex );
        }
        else
        {
            mtCOVERAGE_TEST_MARKER();
        }
    }
}
```

vTaskResume function

```c
void vTaskResume( TaskHandle_t xTaskToResume )
{
    TCB_t * const pxTCB = ( TCB_t * ) xTaskToResume;

    /* Resuming the calling task makes no sense, so NULL is not allowed. */
    configASSERT( xTaskToResume );

    taskENTER_CRITICAL( &xTaskQueueMutex );

    if( ( pxTCB != NULL ) && ( pxTCB != pxCurrentTCB[ xPortGetCoreID() ] ) )
    {
        {
            if( prvTaskIsTaskSuspended( pxTCB ) == pdTRUE )
            {
                traceTASK_RESUME( pxTCB );

                /* Move the task from the suspended list to its ready list. */
                ( void ) uxListRemove( &( pxTCB->xGenericListItem ) );
                prvAddTaskToReadyList( pxTCB );

                /* Yield here if the task may run on this core with equal or higher
                   priority; otherwise notify the core it is pinned to. */
                if( tskCAN_RUN_HERE( pxTCB->xCoreID ) && pxTCB->uxPriority >= pxCurrentTCB[ xPortGetCoreID() ]->uxPriority )
                {
                    taskYIELD_IF_USING_PREEMPTION();
                }
                else if( pxTCB->xCoreID != xPortGetCoreID() )
                {
                    taskYIELD_OTHER_CORE( pxTCB->xCoreID, pxTCB->uxPriority );
                }
                else
                {
                    mtCOVERAGE_TEST_MARKER();
                }
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
        }
    }
    else
    {
        mtCOVERAGE_TEST_MARKER();
    }

    taskEXIT_CRITICAL( &xTaskQueueMutex );
}
```

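FreeRTOS task switching

A task switch is triggered in two ways: by calling an API function that causes a switch, such as taskYIELD(), or by the system tick timer interrupt.

On Cortex-M ports the switch itself is normally carried out in the PendSV (pendable service call) exception handler. In the Xtensa (ESP32) port examined here, the switch is performed by _frxt_dispatch instead.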

Executing a system call

```c
#define taskYIELD()    portYIELD()
#define portYIELD()    vPortYield()
```

vPortYield function

```asm
    .globl  vPortYield
    .type   vPortYield,@function
    .align  4
vPortYield:

    #ifdef __XTENSA_CALL0_ABI__
    addi    sp,  sp, -XT_SOL_FRMSZ
    #else
    entry   sp,  XT_SOL_FRMSZ
    #endif

    /* Build a "solicited" frame: save the return PC and PS */
    rsr     a2,  PS
    s32i    a0,  sp, XT_SOL_PC
    s32i    a2,  sp, XT_SOL_PS

    #ifdef __XTENSA_CALL0_ABI__
    /* Call0 ABI: save the callee-saved registers */
    s32i    a12, sp, XT_SOL_A12
    s32i    a13, sp, XT_SOL_A13
    s32i    a14, sp, XT_SOL_A14
    s32i    a15, sp, XT_SOL_A15
    #else
    /* Windowed ABI: spill the live register windows to the stack */
    movi    a6,  ~(PS_WOE_MASK|PS_INTLEVEL_MASK)
    and     a2,  a2, a6                 /* clear WOE and INTLEVEL */
    addi    a2,  a2, XCHAL_EXCM_LEVEL   /* set INTLEVEL */
    wsr     a2,  PS
    rsync
    call0   xthal_window_spill_nw
    l32i    a2,  sp, XT_SOL_PS          /* restore PS */
    wsr     a2,  PS
    #endif

    rsil    a2,  XCHAL_EXCM_LEVEL       /* mask interrupts up to XCHAL_EXCM_LEVEL */

    #if XCHAL_CP_NUM > 0
    call0   _xt_coproc_savecs           /* save callee-saved coprocessor state */
    #endif

    /* a2 = pxCurrentTCB[ core ] */
    movi    a2,  pxCurrentTCB
    getcoreid a3
    addx4   a2,  a3, a2
    l32i    a2,  a2, 0
    movi    a3,  0
    s32i    a3,  sp, XT_SOL_EXIT        /* 0 marks this as a solicited frame */
    s32i    sp,  a2, TOPOFSTACK_OFFS    /* pxCurrentTCB->pxTopOfStack = sp */

    #if XCHAL_CP_NUM > 0
    /* Clear CPENABLE, also in the task's coprocessor save area */
    l32i    a2,  a2, CP_TOPOFSTACK_OFFS
    movi    a3,  0
    wsr     a3,  CPENABLE
    beqz    a2,  1f
    s16i    a3,  a2, XT_CPENABLE
1:
    #endif

    call0   _frxt_dispatch              /* tail-call the dispatcher; does not return here */
```

_frxt_dispatch function

```asm
    .globl  _frxt_dispatch
    .type   _frxt_dispatch,@function
    .align  4
_frxt_dispatch:

    /* Pick the next task, then a2 = &pxCurrentTCB[ core ] */
    #ifdef __XTENSA_CALL0_ABI__
    call0   vTaskSwitchContext
    movi    a2,  pxCurrentTCB
    getcoreid a3
    addx4   a2,  a3, a2
    #else
    call4   vTaskSwitchContext
    movi    a2,  pxCurrentTCB
    getcoreid a3
    addx4   a2,  a3, a2
    #endif

    l32i    a3,  a2, 0
    l32i    sp,  a3, TOPOFSTACK_OFFS    /* sp = next TCB's pxTopOfStack */
    s32i    a3,  a2, 0

    /* Which kind of frame was saved? 0 = solicited (vPortYield), else interrupt */
    l32i    a2,  sp, XT_STK_EXIT
    bnez    a2,  .L_frxt_dispatch_stk

.L_frxt_dispatch_sol:

    /* Solicited frame: restore the minimal context and return from vPortYield() */
    l32i    a3,  sp, XT_SOL_PS
    #ifdef __XTENSA_CALL0_ABI__
    l32i    a12, sp, XT_SOL_A12
    l32i    a13, sp, XT_SOL_A13
    l32i    a14, sp, XT_SOL_A14
    l32i    a15, sp, XT_SOL_A15
    #endif
    l32i    a0,  sp, XT_SOL_PC
    #if XCHAL_CP_NUM > 0
    rsync
    #endif
    wsr     a3,  PS                     /* interrupts may occur from here on */
    #ifdef __XTENSA_CALL0_ABI__
    addi    sp,  sp, XT_SOL_FRMSZ
    ret
    #else
    retw
    #endif

.L_frxt_dispatch_stk:

    #if XCHAL_CP_NUM > 0
    /* Restore CPENABLE from the task's coprocessor save area */
    movi    a3,  pxCurrentTCB
    getcoreid a2
    addx4   a3,  a2, a3
    l32i    a3,  a3, 0
    l32i    a2,  a3, CP_TOPOFSTACK_OFFS
    l16ui   a3,  a2, XT_CPENABLE
    wsr     a3,  CPENABLE
    #endif

    /* Interrupt frame: restore the full context */
    call0   _xt_context_restore

    #ifdef __XTENSA_CALL0_ABI__
    l32i    a14, sp, XT_STK_A14
    l32i    a15, sp, XT_STK_A15
    #endif

    #if XCHAL_CP_NUM > 0
    rsync
    #endif

    /* Return through the exit dispatcher recorded in the frame */
    l32i    a0,  sp, XT_STK_EXIT
    ret
```

vTaskSwitchContext function

```c
void vTaskSwitchContext( void )
{
    /* Must not be interrupted while choosing the next task. */
    int irqstate = portENTER_CRITICAL_NESTED();
    tskTCB * pxTCB;

    if( uxSchedulerSuspended[ xPortGetCoreID() ] != ( UBaseType_t ) pdFALSE )
    {
        /* Scheduler suspended: remember that a switch is pending. */
        xYieldPending[ xPortGetCoreID() ] = pdTRUE;
    }
    else
    {
        xYieldPending[ xPortGetCoreID() ] = pdFALSE;
        xSwitchingContext[ xPortGetCoreID() ] = pdTRUE;
        traceTASK_SWITCHED_OUT();

        #if ( configGENERATE_RUN_TIME_STATS == 1 )
        {
            #ifdef portALT_GET_RUN_TIME_COUNTER_VALUE
                portALT_GET_RUN_TIME_COUNTER_VALUE( ulTotalRunTime );
            #else
                ulTotalRunTime = portGET_RUN_TIME_COUNTER_VALUE();
            #endif

            /* Charge the elapsed time to the outgoing task. */
            taskENTER_CRITICAL_ISR( &xTaskQueueMutex );
            if( ulTotalRunTime > ulTaskSwitchedInTime[ xPortGetCoreID() ] )
            {
                pxCurrentTCB[ xPortGetCoreID() ]->ulRunTimeCounter += ( ulTotalRunTime - ulTaskSwitchedInTime[ xPortGetCoreID() ] );
            }
            else
            {
                mtCOVERAGE_TEST_MARKER();
            }
            taskEXIT_CRITICAL_ISR( &xTaskQueueMutex );
            ulTaskSwitchedInTime[ xPortGetCoreID() ] = ulTotalRunTime;
        }
        #endif

        taskFIRST_CHECK_FOR_STACK_OVERFLOW();
        taskSECOND_CHECK_FOR_STACK_OVERFLOW();

        #ifdef CONFIG_FREERTOS_PORTMUX_DEBUG
        vPortCPUAcquireMutex( &xTaskQueueMutex, __FUNCTION__, __LINE__ );
        #else
        vPortCPUAcquireMutex( &xTaskQueueMutex );
        #endif

        unsigned portBASE_TYPE foundNonExecutingWaiter = pdFALSE, ableToSchedule = pdFALSE, resetListHead;
        portBASE_TYPE uxDynamicTopReady = uxTopReadyPriority;
        unsigned portBASE_TYPE holdTop = pdFALSE;

        /* Scan the ready lists from the highest priority down for a task that
           is not running on another core and is allowed to run on this one. */
        while( ableToSchedule == pdFALSE && uxDynamicTopReady >= 0 )
        {
            resetListHead = pdFALSE;

            if( !listLIST_IS_EMPTY( &( pxReadyTasksLists[ uxDynamicTopReady ] ) ) )
            {
                ableToSchedule = pdFALSE;
                tskTCB * pxRefTCB;

                /* Remember the entry the cursor points at (skipping the end marker). */
                pxRefTCB = pxReadyTasksLists[ uxDynamicTopReady ].pxIndex->pvOwner;
                if( ( void * ) pxReadyTasksLists[ uxDynamicTopReady ].pxIndex == ( void * ) &pxReadyTasksLists[ uxDynamicTopReady ].xListEnd )
                {
                    listGET_OWNER_OF_NEXT_ENTRY( pxRefTCB, &( pxReadyTasksLists[ uxDynamicTopReady ] ) );
                }

                do
                {
                    listGET_OWNER_OF_NEXT_ENTRY( pxTCB, &( pxReadyTasksLists[ uxDynamicTopReady ] ) );

                    /* Skip the candidate if another core is already running it. */
                    foundNonExecutingWaiter = pdTRUE;
                    portBASE_TYPE i = 0;
                    for( i = 0; i < portNUM_PROCESSORS; i++ )
                    {
                        if( i == xPortGetCoreID() )
                        {
                            continue;
                        }
                        else if( pxCurrentTCB[ i ] == pxTCB )
                        {
                            holdTop = pdTRUE;
                            foundNonExecutingWaiter = pdFALSE;
                            break;
                        }
                    }

                    if( foundNonExecutingWaiter == pdTRUE )
                    {
                        /* It may run here if it is unpinned or pinned to this core. */
                        if( pxTCB->xCoreID == tskNO_AFFINITY )
                        {
                            pxCurrentTCB[ xPortGetCoreID() ] = pxTCB;
                            ableToSchedule = pdTRUE;
                        }
                        else if( pxTCB->xCoreID == xPortGetCoreID() )
                        {
                            pxCurrentTCB[ xPortGetCoreID() ] = pxTCB;
                            ableToSchedule = pdTRUE;
                        }
                        else
                        {
                            ableToSchedule = pdFALSE;
                            holdTop = pdTRUE;
                        }
                    }
                    else
                    {
                        ableToSchedule = pdFALSE;
                    }

                    if( ableToSchedule == pdFALSE )
                    {
                        resetListHead = pdTRUE;
                    }
                    else if( ( ableToSchedule == pdTRUE ) && ( resetListHead == pdTRUE ) )
                    {
                        /* Entries were skipped: move the cursor back to pxRefTCB. */
                        tskTCB * pxResetTCB;
                        do
                        {
                            listGET_OWNER_OF_NEXT_ENTRY( pxResetTCB, &( pxReadyTasksLists[ uxDynamicTopReady ] ) );
                        } while( pxResetTCB != pxRefTCB );
                    }
                } while( ( ableToSchedule == pdFALSE ) && ( pxTCB != pxRefTCB ) );
            }
            else
            {
                if( !holdTop ) --uxTopReadyPriority;
            }
            --uxDynamicTopReady;
        }

        traceTASK_SWITCHED_IN();
        xSwitchingContext[ xPortGetCoreID() ] = pdFALSE;

        #ifdef CONFIG_FREERTOS_PORTMUX_DEBUG
        vPortCPUReleaseMutex( &xTaskQueueMutex, __FUNCTION__, __LINE__ );
        #else
        vPortCPUReleaseMutex( &xTaskQueueMutex );
        #endif

        #if CONFIG_FREERTOS_WATCHPOINT_END_OF_STACK
        vPortSetStackWatchpoint( pxCurrentTCB[ xPortGetCoreID() ]->pxStack );
        #endif
    }

    portEXIT_CRITICAL_NESTED( irqstate );
}
```
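Stripped of the dual-core bookkeeping (holdTop, the affinity checks, skipping a task that is running on the other core), the selection loop above is a downward scan from uxTopReadyPriority for the first non-empty ready list. A single-core sketch with hypothetical names, where ready[p] is the number of ready tasks at priority p:

```c
#include <assert.h>
#include <stddef.h>

#define NUM_PRIOS 8   /* stand-in for configMAX_PRIORITIES */

/* *top plays the role of uxTopReadyPriority: it is never below the highest
   non-empty priority, and the scan lowers it past empty lists.
   Returns the priority to run, or -1 if nothing is ready. */
static int pick_top_priority(const size_t ready[NUM_PRIOS], int *top)
{
    for (int p = *top; p >= 0; p--) {
        if (ready[p] > 0) {
            *top = p;
            return p;
        }
    }
    *top = 0;
    return -1;
}
```

In the real kernel the idle task is always ready at priority 0, so the scan never comes back empty; within the chosen priority the task itself is taken with listGET_OWNER_OF_NEXT_ENTRY(), which rotates through equal-priority tasks.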

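System tick timer interrupt: _frxt_timer_int

```asm
    .globl  _frxt_timer_int
    .type   _frxt_timer_int,@function
    .align  4
_frxt_timer_int:

    /* The next comparator value is derived from the old one (not from "now")
       to avoid drift; if servicing was late by more than one tick, the loop
       below processes the missed ticks. */
    ENTRY(16)

    #ifdef CONFIG_PM_TRACE
    movi    a6, 1
    getcoreid a7
    call4   esp_pm_trace_enter
    #endif

.L_xt_timer_int_catchup:

    /* Program the comparator for the next tick */
    #ifdef XT_CLOCK_FREQ
    movi    a2, XT_TICK_DIVISOR         /* a2 = comparator increment */
    #else
    movi    a3, _xt_tick_divisor
    l32i    a2, a3, 0                   /* a2 = comparator increment */
    #endif
    rsr     a3, XT_CCOMPARE             /* a3 = old comparator value */
    add     a4, a3, a2                  /* a4 = new comparator value */
    wsr     a4, XT_CCOMPARE             /* update comparator, clear interrupt */
    esync

    #ifdef __XTENSA_CALL0_ABI__
    /* Preserve a2 and a3 across the C call */
    s32i    a2, sp, 4
    s32i    a3, sp, 8
    #endif

    /* Call the FreeRTOS tick handler */
    #ifdef __XTENSA_CALL0_ABI__
    call0   xPortSysTickHandler
    #else
    call4   xPortSysTickHandler
    #endif

    #ifdef __XTENSA_CALL0_ABI__
    l32i    a2, sp, 4
    l32i    a3, sp, 8
    #endif

    /* Catch up if more than one tick has elapsed */
    esync
    rsr     a4, CCOUNT                  /* a4 = current cycle count */
    sub     a4, a4, a3                  /* a4 = cycles since the old comparator value */
    blt     a2, a4, .L_xt_timer_int_catchup   /* repeat while more than one tick behind */

    #ifdef CONFIG_PM_TRACE
    movi    a6, 1
    getcoreid a7
    call4   esp_pm_trace_exit
    #endif

    RET(16)
```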

xPortSysTickHandler function

```c
BaseType_t xPortSysTickHandler( void )
{
    BaseType_t ret;

    portbenchmarkIntLatency();
    traceISR_ENTER( SYSTICK_INTR_ID );

    /* Advance the tick count; a non-pdFALSE result requests a context switch. */
    ret = xTaskIncrementTick();
    if( ret != pdFALSE )
    {
        portYIELD_FROM_ISR();
    }
    else
    {
        traceISR_EXIT();
    }
    return ret;
}
```

```c
#define portYIELD_FROM_ISR() { traceISR_EXIT_TO_SCHEDULER(); _frxt_setup_switch(); }
```

_frxt_setup_switch function

```asm
    .global _frxt_setup_switch
    .type   _frxt_setup_switch,@function
    .align  4
_frxt_setup_switch:

    ENTRY(16)

    /* Set this core's port_switch_flag; the switch itself happens on interrupt exit */
    getcoreid a3
    movi    a2, port_switch_flag
    addx4   a2, a3, a2
    movi    a3, 1
    s32i    a3, a2, 0

    RET(16)
```

vPortYieldFromInt

```asm
    .globl  vPortYieldFromInt
    .type   vPortYieldFromInt,@function
    .align  4
vPortYieldFromInt:

    ENTRY(16)

    #if XCHAL_CP_NUM > 0
    /* Save CPENABLE in the task's coprocessor save area, then disable all coprocessors */
    movi    a3, pxCurrentTCB
    getcoreid a2
    addx4   a3, a2, a3
    l32i    a3, a3, 0
    l32i    a2, a3, CP_TOPOFSTACK_OFFS
    rsr     a3, CPENABLE
    s16i    a3, a2, XT_CPENABLE
    movi    a3, 0
    wsr     a3, CPENABLE
    #endif

    #ifdef __XTENSA_CALL0_ABI__
    call0   _frxt_dispatch              /* tail-call the dispatcher */
    #else
    RET(16)
    #endif
```

Summary: the paths that lead to a context switch

```mermaid
graph LR
    fd(_frxt_dispatch) --> tsc(vTaskSwitchContext)
    ty(taskYIELD/portYIELD) --> py(vPortYield)
    py --> fd
    fie(XT_RTOS_INT_ENTER/_frxt_int_enter) --> fti(_frxt_timer_int)
    fti --> psth(xPortSysTickHandler)
    psth --> pyfi(portYIELD_FROM_ISR)
    pyfi --> fss(_frxt_setup_switch)
    fss --> fiex(XT_RTOS_INT_EXIT/_frxt_int_exit)
    fiex --> pyf(vPortYieldFromInt)
    pyf --> fd
    tyoc(taskYIELD_OTHER_CORE) --> vpyoc(vPortYieldOtherCore)
    vpyoc --> ecisy(esp_crosscore_int_send_yield)
    ecisy --> ecis(esp_crosscore_int_send)
    ecis --> eci(esp_crosscore_isr)
    eci --> ecihy(esp_crosscore_isr_handle_yield)
    ecihy --> pyfi
```

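FreeRTOS time-slice scheduling

Time-slice scheduling happens in the tick timer's interrupt service routine, which calls xPortSysTickHandler(). xPortSysTickHandler() can trigger a task switch, but only conditionally: the switch is requested only when xTaskIncrementTick() returns a value other than pdFALSE.

With preemption and time slicing enabled (configUSE_PREEMPTION and configUSE_TIME_SLICING both 1), xTaskIncrementTick() returns pdTRUE in two cases:

- other ready tasks share the running task's priority;
- it unblocks a delayed task whose priority is equal to or higher than that of the running task.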

FreeRTOS time management

vTaskDelay: relative delay

```c
void vTaskDelay(const TickType_t xTicksToDelay)
{
    TickType_t xTimeToWake;
    BaseType_t xAlreadyYielded = pdFALSE;

    /* A delay of zero only forces a reschedule. */
    if (xTicksToDelay > (TickType_t) 0U) {
        configASSERT(uxSchedulerSuspended[xPortGetCoreID()] == 0);
        taskENTER_CRITICAL(&xTaskQueueMutex);
        {
            traceTASK_DELAY();
            /* Wake time relative to now; may overflow, which
               prvAddCurrentTaskToDelayedList handles. */
            xTimeToWake = xTickCount + xTicksToDelay;

            /* Remove the task from the ready list; if it was the last ready
               task at its priority, clear that priority's ready bit. */
            if (uxListRemove(&(pxCurrentTCB[xPortGetCoreID()]->xGenericListItem)) == (UBaseType_t) 0) {
                portRESET_READY_PRIORITY(pxCurrentTCB[xPortGetCoreID()]->uxPriority, uxTopReadyPriority);
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
            prvAddCurrentTaskToDelayedList(xPortGetCoreID(), xTimeToWake);
        }
        taskEXIT_CRITICAL(&xTaskQueueMutex);
    } else {
        mtCOVERAGE_TEST_MARKER();
    }

    if (xAlreadyYielded == pdFALSE) {
        portYIELD_WITHIN_API();
    } else {
        mtCOVERAGE_TEST_MARKER();
    }
}
```

The prvAddCurrentTaskToDelayedList function

```c
static void prvAddCurrentTaskToDelayedList(const BaseType_t xCoreID, const TickType_t xTimeToWake)
{
    /* The list item value is the wake time; delayed lists are sorted by it. */
    listSET_LIST_ITEM_VALUE(&(pxCurrentTCB[xCoreID]->xGenericListItem), xTimeToWake);

    if (xTimeToWake < xTickCount) {
        /* The wake time overflowed: it lies after the next xTickCount wrap. */
        traceMOVED_TASK_TO_OVERFLOW_DELAYED_LIST();
        vListInsert(pxOverflowDelayedTaskList, &(pxCurrentTCB[xCoreID]->xGenericListItem));
    } else {
        traceMOVED_TASK_TO_DELAYED_LIST();
        vListInsert(pxDelayedTaskList, &(pxCurrentTCB[xCoreID]->xGenericListItem));

        /* Track the earliest wake time so the tick handler can skip needless checks. */
        if (xTimeToWake < xNextTaskUnblockTime) {
            xNextTaskUnblockTime = xTimeToWake;
        } else {
            mtCOVERAGE_TEST_MARKER();
        }
    }
}
```
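The overflow test in prvAddCurrentTaskToDelayedList is easiest to see with small tick values. The sketch below is a hosted model, not kernel code:

- it uses a 16-bit tick type (as with configUSE_16_BIT_TICKS = 1);
- its names are hypothetical;
- it only reports which list the task would go to.

When xTickCount + xTicksToDelay wraps, the wake time compares smaller than the current tick count, so the task goes to the overflow delayed list. The kernel swaps the two lists when xTickCount itself wraps to zero.

```c
#include <assert.h>
#include <stdint.h>

typedef uint16_t tick16_t;  /* assumption: 16-bit ticks make the wrap easy to see */

typedef enum { DELAYED_LIST, OVERFLOW_DELAYED_LIST } which_list_t;

typedef struct {
    tick16_t tick_count;        /* models xTickCount           */
    tick16_t next_unblock_time; /* models xNextTaskUnblockTime */
} kernel_time_t;

/* Mirrors the decision made by prvAddCurrentTaskToDelayedList. */
static which_list_t add_to_delayed(kernel_time_t *k, tick16_t ticks_to_delay)
{
    tick16_t wake;

    assert(ticks_to_delay > 0);                      /* vTaskDelay only gets here for > 0 */
    wake = (tick16_t)(k->tick_count + ticks_to_delay); /* may wrap around */
    if (wake < k->tick_count) {
        return OVERFLOW_DELAYED_LIST;                /* wakes after xTickCount wraps */
    }
    if (wake < k->next_unblock_time) {
        k->next_unblock_time = wake;                 /* earliest wake-up so far */
    }
    return DELAYED_LIST;
}
```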

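vTaskDelayUntil: absolute delay

Even a task delayed with this function is not guaranteed to run strictly periodically. The function only guarantees that the task is unblocked (moved to the Ready state) at a fixed period. When it actually runs still depends on the other tasks that are ready at that moment, in particular higher-priority ones.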

```c
void vTaskDelayUntil(TickType_t * const pxPreviousWakeTime, const TickType_t xTimeIncrement)
{
    TickType_t xTimeToWake;
    BaseType_t xAlreadyYielded = pdFALSE, xShouldDelay = pdFALSE;

    configASSERT(pxPreviousWakeTime);
    configASSERT((xTimeIncrement > 0U));
    configASSERT(uxSchedulerSuspended[xPortGetCoreID()] == 0);

    taskENTER_CRITICAL(&xTaskQueueMutex);
    {
        /* Read the tick count once so every comparison uses the same value. */
        const TickType_t xConstTickCount = xTickCount;

        /* Wake time relative to the previous wake time, not to now. */
        xTimeToWake = *pxPreviousWakeTime + xTimeIncrement;

        if (xConstTickCount < *pxPreviousWakeTime) {
            /* The tick count has overflowed since the last call: delay only if
               the wake time has also overflowed and is still in the future. */
            if ((xTimeToWake < *pxPreviousWakeTime) && (xTimeToWake > xConstTickCount)) {
                xShouldDelay = pdTRUE;
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
        } else {
            /* No tick overflow: delay if the wake time overflowed or is still in the future. */
            if ((xTimeToWake < *pxPreviousWakeTime) || (xTimeToWake > xConstTickCount)) {
                xShouldDelay = pdTRUE;
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
        }

        /* Remember the wake time for the next call. */
        *pxPreviousWakeTime = xTimeToWake;

        if (xShouldDelay != pdFALSE) {
            traceTASK_DELAY_UNTIL();
            if (uxListRemove(&(pxCurrentTCB[xPortGetCoreID()]->xGenericListItem)) == (UBaseType_t) 0) {
                portRESET_READY_PRIORITY(pxCurrentTCB[xPortGetCoreID()]->uxPriority, uxTopReadyPriority);
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
            prvAddCurrentTaskToDelayedList(xPortGetCoreID(), xTimeToWake);
        } else {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    taskEXIT_CRITICAL(&xTaskQueueMutex);

    if (xAlreadyYielded == pdFALSE) {
        portYIELD_WITHIN_API();
    } else {
        mtCOVERAGE_TEST_MARKER();
    }
}
```
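The wrap-around cases in vTaskDelayUntil reduce to a pure decision function. The hosted sketch below extracts only that logic, using 32-bit ticks. The name `delay_until_should_block` is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t tick32_t;

/* Mirrors the xShouldDelay logic of vTaskDelayUntil, including the
 * tick-counter wrap cases; updates *prev_wake as the real function does. */
static bool delay_until_should_block(tick32_t *prev_wake, tick32_t increment, tick32_t now)
{
    bool should_delay;
    tick32_t wake;

    assert(prev_wake != NULL && increment > 0);
    wake = *prev_wake + increment;                   /* may wrap */
    if (now < *prev_wake) {
        /* Tick count wrapped since the last wake: block only if the
         * wake time wrapped too and is still ahead of now. */
        should_delay = (wake < *prev_wake) && (wake > now);
    } else {
        /* No tick wrap: block if the wake time wrapped or is still ahead. */
        should_delay = (wake < *prev_wake) || (wake > now);
    }
    *prev_wake = wake;
    return should_delay;
}
```

The second test case illustrates the point made above. A task that overran its period does not block at all, yet its previous wake time still advances by exactly one period. The schedule therefore stays anchored to the original start time instead of drifting.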

The portYIELD_WITHIN_API macro definition

```c
#define portYIELD_WITHIN_API() esp_crosscore_int_send_yield(xPortGetCoreID())
```

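Main responsibilities of xTaskIncrementTick

xTickCount is the FreeRTOS system tick counter. It is incremented by one on every tick timer interrupt, and the increment itself is done inside xTaskIncrementTick. The function's main steps are:

Increment the system tick counter by 1.

Check whether the delay of any blocked task has expired; if so, move that task back to the Ready state.

Handle time-slice scheduling.

Based on the above, return to the tick interrupt handler whether a context switch is needed.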
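The four steps above, together with the time-slice rule from the time-slice scheduling section, fit in a few lines of hosted C. This is a simplified sketch, not the kernel's implementation:

- tasks live in a plain array instead of ready and delayed lists;
- tick-counter wrap, scheduler suspension and multi-core affinity are ignored;
- configUSE_PREEMPTION and configUSE_TIME_SLICING are assumed to be 1;
- all names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_TASKS 8          /* assumption for the sketch */
#define MAX_PRIORITIES 5     /* stands in for configMAX_PRIORITIES */

typedef enum { TASK_READY, TASK_DELAYED } task_state_t;

typedef struct {
    unsigned priority;
    task_state_t state;
    uint32_t wake_tick;      /* valid while TASK_DELAYED */
} task_model_t;

typedef struct {
    uint32_t tick_count;     /* models xTickCount */
    task_model_t tasks[MAX_TASKS];
    size_t num_tasks;
    unsigned current_priority; /* priority of the running task (itself READY) */
} sched_model_t;

/* Simplified xTaskIncrementTick: returns true if a switch is needed. */
static bool increment_tick(sched_model_t *s)
{
    bool switch_required = false;
    unsigned ready_at_current = 0;
    size_t i;

    assert(s->current_priority < MAX_PRIORITIES);
    s->tick_count++;                                     /* step 1 */
    for (i = 0; i < s->num_tasks; i++) {                /* step 2 */
        task_model_t *t = &s->tasks[i];
        if (t->state == TASK_DELAYED && t->wake_tick <= s->tick_count) {
            t->state = TASK_READY;
            if (t->priority >= s->current_priority) {
                switch_required = true;                  /* preemption */
            }
        }
    }
    for (i = 0; i < s->num_tasks; i++) {                /* step 3 */
        if (s->tasks[i].state == TASK_READY &&
            s->tasks[i].priority == s->current_priority) {
            ready_at_current++;
        }
    }
    if (ready_at_current > 1) {
        switch_required = true;                          /* time slicing */
    }
    return switch_required;                              /* step 4 */
}
```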

FreeRTOS queues

The queue structure Queue_t

```c
typedef struct QueueDefinition {
    int8_t *pcHead;               /* start of the storage area */
    int8_t *pcTail;               /* end of the storage area */
    int8_t *pcWriteTo;            /* next free slot for a send to the back */
    union {
        int8_t *pcReadFrom;               /* last item read, when used as a queue */
        UBaseType_t uxRecursiveCallCount; /* take count, when used as a recursive mutex */
    } u;
    List_t xTasksWaitingToSend;    /* tasks blocked because the queue is full */
    List_t xTasksWaitingToReceive; /* tasks blocked because the queue is empty */
    volatile UBaseType_t uxMessagesWaiting; /* number of items currently queued */
    UBaseType_t uxLength;          /* capacity in items */
    UBaseType_t uxItemSize;        /* size of one item in bytes */
#if ((configSUPPORT_STATIC_ALLOCATION == 1) && (configSUPPORT_DYNAMIC_ALLOCATION == 1))
    uint8_t ucStaticallyAllocated;
#endif
#if (configUSE_QUEUE_SETS == 1)
    struct QueueDefinition *pxQueueSetContainer;
#endif
#if (configUSE_TRACE_FACILITY == 1)
    UBaseType_t uxQueueNumber;
    uint8_t ucQueueType;
#endif
    portMUX_TYPE mux;              /* ESP-IDF spinlock protecting this queue */
} xQUEUE;

typedef xQUEUE Queue_t;
```

Creating a queue: xQueueCreate

```c
#define xQueueCreate(uxQueueLength, uxItemSize) \
    xQueueGenericCreate((uxQueueLength), (uxItemSize), (queueQUEUE_TYPE_BASE))

QueueHandle_t xQueueGenericCreate(const UBaseType_t uxQueueLength, const UBaseType_t uxItemSize, const uint8_t ucQueueType)
{
    Queue_t *pxNewQueue;
    size_t xQueueSizeInBytes;
    uint8_t *pucQueueStorage;

    configASSERT(uxQueueLength > (UBaseType_t) 0);

    if (uxItemSize == (UBaseType_t) 0) {
        /* Semaphores carry no data, so no storage area is needed. */
        xQueueSizeInBytes = (size_t) 0;
    } else {
        xQueueSizeInBytes = (size_t) (uxQueueLength * uxItemSize);
    }

    /* The Queue_t header and the storage area come from one allocation. */
    pxNewQueue = (Queue_t *) pvPortMalloc(sizeof(Queue_t) + xQueueSizeInBytes);

    if (pxNewQueue != NULL) {
        /* The storage area starts right after the structure. */
        pucQueueStorage = ((uint8_t *) pxNewQueue) + sizeof(Queue_t);
#if (configSUPPORT_STATIC_ALLOCATION == 1)
        {
            pxNewQueue->ucStaticallyAllocated = pdFALSE;
        }
#endif
        prvInitialiseNewQueue(uxQueueLength, uxItemSize, pucQueueStorage, ucQueueType, pxNewQueue);
    }

    return pxNewQueue;
}
```

Initializing a queue: prvInitialiseNewQueue

```c
static void prvInitialiseNewQueue(const UBaseType_t uxQueueLength, const UBaseType_t uxItemSize, uint8_t *pucQueueStorage, const uint8_t ucQueueType, Queue_t *pxNewQueue)
{
    /* Avoid an unused-parameter warning when the trace facility is off. */
    (void) ucQueueType;

    if (uxItemSize == (UBaseType_t) 0) {
        /* No storage area, but pcHead must not be NULL: a NULL pcHead marks
           the queue as a mutex. Point it at the queue itself instead. */
        pxNewQueue->pcHead = (int8_t *) pxNewQueue;
    } else {
        pxNewQueue->pcHead = (int8_t *) pucQueueStorage;
    }

    pxNewQueue->uxLength = uxQueueLength;
    pxNewQueue->uxItemSize = uxItemSize;
    (void) xQueueGenericReset(pxNewQueue, pdTRUE);

#if (configUSE_TRACE_FACILITY == 1)
    {
        pxNewQueue->ucQueueType = ucQueueType;
    }
#endif

#if (configUSE_QUEUE_SETS == 1)
    {
        pxNewQueue->pxQueueSetContainer = NULL;
    }
#endif

    traceQUEUE_CREATE(pxNewQueue);
}
```

The queue reset function xQueueGenericReset

```c
BaseType_t xQueueGenericReset(QueueHandle_t xQueue, BaseType_t xNewQueue)
{
    Queue_t * const pxQueue = (Queue_t *) xQueue;

    configASSERT(pxQueue);

    if (xNewQueue == pdTRUE) {
        vPortCPUInitializeMutex(&pxQueue->mux);   /* new queue: initialize its spinlock */
    }

    taskENTER_CRITICAL(&pxQueue->mux);
    {
        pxQueue->pcTail = pxQueue->pcHead + (pxQueue->uxLength * pxQueue->uxItemSize);
        pxQueue->uxMessagesWaiting = (UBaseType_t) 0U;
        pxQueue->pcWriteTo = pxQueue->pcHead;
        /* pcReadFrom starts at the last slot: a read first advances it, then copies. */
        pxQueue->u.pcReadFrom = pxQueue->pcHead + ((pxQueue->uxLength - (UBaseType_t) 1U) * pxQueue->uxItemSize);

        if (xNewQueue == pdFALSE) {
            /* Resetting an existing queue: receivers stay blocked (the queue is now
               empty), but one task blocked on send can now proceed. */
            if (listLIST_IS_EMPTY(&(pxQueue->xTasksWaitingToSend)) == pdFALSE) {
                if (xTaskRemoveFromEventList(&(pxQueue->xTasksWaitingToSend)) == pdTRUE) {
                    queueYIELD_IF_USING_PREEMPTION();
                } else {
                    mtCOVERAGE_TEST_MARKER();
                }
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
        } else {
            /* New queue: initialize the waiting lists. */
            vListInitialise(&(pxQueue->xTasksWaitingToSend));
            vListInitialise(&(pxQueue->xTasksWaitingToReceive));
        }
    }
    taskEXIT_CRITICAL(&pxQueue->mux);

    return pdPASS;
}
```
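The pointer setup in xQueueGenericReset makes more sense next to the copy routines that use it. The hosted sketch below is a simplified model with no locking, no waiting lists and hypothetical names. It assumes the kernel's copy routines (prvCopyDataToQueue and prvCopyDataFromQueue, not shown in this article) move the pointers as follows:

- a send to the back writes at pcWriteTo, then advances it;
- a send to the front writes at pcReadFrom, then steps it backwards;
- a receive advances pcReadFrom first and then copies, which is why pcReadFrom starts at the last slot;
- a peek restores pcReadFrom afterwards.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified queue with the same pointer roles as Queue_t. */
typedef struct {
    char *head, *tail, *write_to, *read_from;
    size_t length, item_size, waiting;
} ring_queue_t;

static void ring_reset(ring_queue_t *q, char *storage, size_t length, size_t item_size)
{
    assert(length > 0 && item_size > 0);
    q->head = storage;
    q->tail = storage + length * item_size;              /* one past the end     */
    q->length = length;
    q->item_size = item_size;
    q->waiting = 0;
    q->write_to = q->head;                               /* next back slot       */
    q->read_from = q->head + (length - 1) * item_size;   /* "last read" = last slot */
}

/* Like queueSEND_TO_BACK: copy at write_to, then advance with wrap. */
static int ring_send_back(ring_queue_t *q, const void *item)
{
    if (q->waiting == q->length) return 0;               /* errQUEUE_FULL */
    memcpy(q->write_to, item, q->item_size);
    q->write_to += q->item_size;
    if (q->write_to >= q->tail) q->write_to = q->head;
    q->waiting++;
    return 1;
}

/* Like queueSEND_TO_FRONT: copy at read_from, then step it back with wrap. */
static int ring_send_front(ring_queue_t *q, const void *item)
{
    if (q->waiting == q->length) return 0;
    memcpy(q->read_from, item, q->item_size);
    if (q->read_from == q->head) q->read_from = q->tail - q->item_size;
    else q->read_from -= q->item_size;
    q->waiting++;
    return 1;
}

/* Like a receive (peek == 0) or a peek (peek != 0): advance, then copy. */
static int ring_receive(ring_queue_t *q, void *out, int peek)
{
    char *saved = q->read_from;
    if (q->waiting == 0) return 0;                       /* queue empty */
    q->read_from += q->item_size;
    if (q->read_from >= q->tail) q->read_from = q->head;
    memcpy(out, q->read_from, q->item_size);
    if (peek) q->read_from = saved;                      /* item stays queued */
    else q->waiting--;
    return 1;
}
```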

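Sending messages to a queue

| Function | Description |
| --- | --- |
| xQueueSend | Send a message to the back of the queue |
| xQueueSendToBack | Send a message to the back of the queue (same as xQueueSend) |
| xQueueSendToFront | Send a message to the front of the queue |
| xQueueOverwrite | Send a message with overwrite: when the queue is full, the old message is overwritten. Only for queues of length 1 (xQueueGenericSend asserts this) |
| xQueueSendFromISR | Send a message to the back of the queue; for use in interrupt service routines |
| xQueueSendToBackFromISR | Send a message to the back of the queue; for use in interrupt service routines |
| xQueueSendToFrontFromISR | Send a message to the front of the queue; for use in interrupt service routines |
| xQueueOverwriteFromISR | Send a message with overwrite (the old message is overwritten when the queue is full); for use in interrupt service routines |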

The xQueueGenericSend function

```c
BaseType_t xQueueGenericSend(QueueHandle_t xQueue, const void * const pvItemToQueue, TickType_t xTicksToWait, const BaseType_t xCopyPosition)
{
    BaseType_t xEntryTimeSet = pdFALSE, xYieldRequired;
    TimeOut_t xTimeOut;
    Queue_t * const pxQueue = (Queue_t *) xQueue;

    configASSERT(pxQueue);
    configASSERT(!((pvItemToQueue == NULL) && (pxQueue->uxItemSize != (UBaseType_t) 0U)));
    configASSERT(!((xCopyPosition == queueOVERWRITE) && (pxQueue->uxLength != 1)));
#if ((INCLUDE_xTaskGetSchedulerState == 1) || (configUSE_TIMERS == 1))
    {
        configASSERT(!((xTaskGetSchedulerState() == taskSCHEDULER_SUSPENDED) && (xTicksToWait != 0)));
    }
#endif

    for (;;) {
        taskENTER_CRITICAL(&pxQueue->mux);
        {
            /* Room in the queue (or overwriting): copy the item in and return. */
            if ((pxQueue->uxMessagesWaiting < pxQueue->uxLength) || (xCopyPosition == queueOVERWRITE)) {
                traceQUEUE_SEND(pxQueue);
                xYieldRequired = prvCopyDataToQueue(pxQueue, pvItemToQueue, xCopyPosition);

#if (configUSE_QUEUE_SETS == 1)
                {
                    if (pxQueue->pxQueueSetContainer != NULL) {
                        if (prvNotifyQueueSetContainer(pxQueue, xCopyPosition) == pdTRUE) {
                            queueYIELD_IF_USING_PREEMPTION();
                        } else {
                            mtCOVERAGE_TEST_MARKER();
                        }
                    } else {
                        if (listLIST_IS_EMPTY(&(pxQueue->xTasksWaitingToReceive)) == pdFALSE) {
                            if (xTaskRemoveFromEventList(&(pxQueue->xTasksWaitingToReceive)) == pdTRUE) {
                                queueYIELD_IF_USING_PREEMPTION();
                            } else {
                                mtCOVERAGE_TEST_MARKER();
                            }
                        } else if (xYieldRequired != pdFALSE) {
                            queueYIELD_IF_USING_PREEMPTION();
                        } else {
                            mtCOVERAGE_TEST_MARKER();
                        }
                    }
                }
#else
                {
                    /* Unblock the highest-priority task waiting to receive, if any. */
                    if (listLIST_IS_EMPTY(&(pxQueue->xTasksWaitingToReceive)) == pdFALSE) {
                        if (xTaskRemoveFromEventList(&(pxQueue->xTasksWaitingToReceive)) == pdTRUE) {
                            queueYIELD_IF_USING_PREEMPTION();
                        } else {
                            mtCOVERAGE_TEST_MARKER();
                        }
                    } else if (xYieldRequired != pdFALSE) {
                        /* e.g. giving back a mutex disinherited a priority */
                        queueYIELD_IF_USING_PREEMPTION();
                    } else {
                        mtCOVERAGE_TEST_MARKER();
                    }
                }
#endif
                taskEXIT_CRITICAL(&pxQueue->mux);
                return pdPASS;
            } else {
                if (xTicksToWait == (TickType_t) 0) {
                    /* Queue full and no block time: fail immediately. */
                    taskEXIT_CRITICAL(&pxQueue->mux);
                    traceQUEUE_SEND_FAILED(pxQueue);
                    return errQUEUE_FULL;
                } else if (xEntryTimeSet == pdFALSE) {
                    /* First time round: record when the wait started. */
                    vTaskSetTimeOutState(&xTimeOut);
                    xEntryTimeSet = pdTRUE;
                } else {
                    mtCOVERAGE_TEST_MARKER();
                }
            }
        }
        taskEXIT_CRITICAL(&pxQueue->mux);

        taskENTER_CRITICAL(&pxQueue->mux);
        if (xTaskCheckForTimeOut(&xTimeOut, &xTicksToWait) == pdFALSE) {
            if (prvIsQueueFull(pxQueue) != pdFALSE) {
                /* Still full and not timed out: block on the send list. */
                traceBLOCKING_ON_QUEUE_SEND(pxQueue);
                vTaskPlaceOnEventList(&(pxQueue->xTasksWaitingToSend), xTicksToWait);
                taskEXIT_CRITICAL(&pxQueue->mux);
                portYIELD_WITHIN_API();
            } else {
                /* Space appeared in the meantime: loop and try again. */
                taskEXIT_CRITICAL(&pxQueue->mux);
            }
        } else {
            /* Timed out. */
            taskEXIT_CRITICAL(&pxQueue->mux);
            traceQUEUE_SEND_FAILED(pxQueue);
            return errQUEUE_FULL;
        }
    }
}
```

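Reading messages from a queue

| Function | Description |
| --- | --- |
| xQueueReceive | Read a message from the queue and remove it from the queue |
| xQueuePeek | Read a message from the queue without removing it |
| xQueueReceiveFromISR | Read a message from the queue and remove it; for use in interrupt service routines |
| xQueuePeekFromISR | Read a message from the queue without removing it; for use in interrupt service routines |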

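FreeRTOS semaphores

Differences between binary semaphores and mutexes

A mutex has a priority inheritance mechanism; a binary semaphore does not.

Binary semaphores are better suited to synchronization, while mutexes are suited to simple mutual exclusion.

Binary semaphores

A binary semaphore is simply a queue with a single item slot. This special queue is either full or empty, which is exactly what makes it binary. Tasks and interrupts using it do not care what message the queue holds, only whether it is full or empty, and this is enough to synchronize tasks with interrupts. The queue behind a binary semaphore has no storage area (its item size is 0), so whether it is empty is determined from the queue structure's uxMessagesWaiting member.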

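| Function | Description |
| --- | --- |
| xSemaphoreCreateBinary | Create a binary semaphore dynamically; a newly created binary semaphore is empty |
| xSemaphoreCreateBinaryStatic | Create a binary semaphore statically |
| xSemaphoreGive | Task-level give; works for binary semaphores, counting semaphores and mutexes |
| xSemaphoreGiveFromISR | Interrupt-level give; only for binary and counting semaphores, not mutexes (a mutex must handle priority inheritance, and an interrupt is not a task) |
| xSemaphoreTake | Task-level take; works for binary semaphores, counting semaphores and mutexes |
| xSemaphoreTakeFromISR | Interrupt-level take; only for binary and counting semaphores, not mutexes (a mutex must handle priority inheritance, and an interrupt is not a task) |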

```c
#define xSemaphoreCreateBinary() \
    xQueueGenericCreate((UBaseType_t) 1, semSEMAPHORE_QUEUE_ITEM_LENGTH, queueQUEUE_TYPE_BINARY_SEMAPHORE)
#define xSemaphoreGive(xSemaphore) \
    xQueueGenericSend((QueueHandle_t) (xSemaphore), NULL, semGIVE_BLOCK_TIME, queueSEND_TO_BACK)
#define xSemaphoreTake(xSemaphore, xBlockTime) \
    xQueueGenericReceive((QueueHandle_t) (xSemaphore), NULL, (xBlockTime), pdFALSE)
#define xSemaphoreTakeFromISR(xSemaphore, pxHigherPriorityTaskWoken) \
    xQueueReceiveFromISR((QueueHandle_t) (xSemaphore), NULL, (pxHigherPriorityTaskWoken))
```

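Counting semaphores

A counting semaphore is a queue whose length is greater than 1; again, what data the queue holds does not matter. Counting semaphores are mainly used for:

Event counting

Each time an event occurs, the event handler gives the semaphore (incrementing its count), and another task takes the semaphore to process the event. In this case the counting semaphore is created with an initial count of 0.

Resource management

The semaphore value is the number of resources currently available. A task that wants to use a resource must first take the semaphore; on success the value drops by 1, and a value of 0 means no resources are left. When a task has finished with a resource it must give the semaphore back, which increments the value by 1. In this case the counting semaphore is created with an initial count equal to the number of resources.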

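| Function | Description |
| --- | --- |
| xSemaphoreCreateCounting | Create a counting semaphore dynamically |
| xSemaphoreCreateCountingStatic | Create a counting semaphore statically |
| xSemaphoreGetCount | Get the current count of a counting semaphore |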

```c
#define xSemaphoreCreateCounting(uxMaxCount, uxInitialCount) \
    xQueueCreateCountingSemaphore((uxMaxCount), (uxInitialCount))
#define xSemaphoreCreateCountingStatic(uxMaxCount, uxInitialCount, pxSemaphoreBuffer) \
    xQueueCreateCountingSemaphoreStatic((uxMaxCount), (uxInitialCount), (pxSemaphoreBuffer))

QueueHandle_t xQueueCreateCountingSemaphore(const UBaseType_t uxMaxCount, const UBaseType_t uxInitialCount)
{
    QueueHandle_t xHandle;

    configASSERT(uxMaxCount != 0);
    configASSERT(uxInitialCount <= uxMaxCount);

    /* A queue of uxMaxCount zero-size items. */
    xHandle = xQueueGenericCreate(uxMaxCount, queueSEMAPHORE_QUEUE_ITEM_LENGTH, queueQUEUE_TYPE_COUNTING_SEMAPHORE);

    if (xHandle != NULL) {
        /* The count is simply the number of "messages" in the queue. */
        ((Queue_t *) xHandle)->uxMessagesWaiting = uxInitialCount;
        traceCREATE_COUNTING_SEMAPHORE();
    } else {
        traceCREATE_COUNTING_SEMAPHORE_FAILED();
    }

    configASSERT(xHandle);
    return xHandle;
}
```
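Both counting-semaphore uses described earlier come down to a bounded counter: the count is the queue's uxMessagesWaiting, and the bound is its length. Below is a minimal, non-blocking hosted model with hypothetical names. With a maximum of 1 it behaves like a binary semaphore, where a second give is simply lost.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of a counting semaphore. */
typedef struct {
    unsigned count;  /* models uxMessagesWaiting         */
    unsigned max;    /* models uxLength (uxMaxCount)     */
} sem_model_t;

static sem_model_t sem_create(unsigned max, unsigned initial)
{
    sem_model_t s;
    assert(max != 0 && initial <= max);  /* same checks as xQueueCreateCountingSemaphore */
    s.count = initial;
    s.max = max;
    return s;
}

/* Give: like sending to the queue; fails when it is "full". */
static bool sem_give(sem_model_t *s)
{
    if (s->count == s->max) return false;
    s->count++;
    return true;
}

/* Take with zero block time: fails when the queue is "empty". */
static bool sem_take(sem_model_t *s)
{
    if (s->count == 0) return false;
    s->count--;
    return true;
}
```

For resource management the semaphore starts at the number of resources (3 in the test). For event counting, or as a binary semaphore, it starts at 0.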

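Priority inversion

A common problem when using binary semaphores is priority inversion. It occurs frequently in preemptive kernels, but it must not be allowed in a real-time system, because it breaks the expected ordering of tasks.

A typical scenario:

A low-priority task and a high-priority task use the same semaphore, and the system also has medium-priority tasks. If the low-priority task holds the semaphore, the high-priority task has to wait. Meanwhile a medium-priority task can preempt the low-priority task and run before the high-priority task, which cannot run because it is waiting for the semaphore. The high-priority task is thus effectively held up by lower-priority ones: this is priority inversion.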

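Mutexes

A mutex is essentially a binary semaphore with priority inheritance. Binary semaphores are best for synchronization; mutexes are best for applications that need mutually exclusive access.

When a mutex is held by a low-priority task and a high-priority task tries to take it, the high-priority task blocks. However, the low-priority task's priority is raised to that of the high-priority task; this is priority inheritance. Priority inheritance keeps the time the high-priority task spends blocked as short as possible and minimizes the impact of any priority inversion that has already occurred.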
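The inheritance rule can be sketched as follows. This is a simplified hosted model, not the FreeRTOS implementation:

- there is only one mutex, and takes never actually block;
- the holder returns to its base priority as soon as it gives the mutex, whereas the kernel tracks uxMutexesHeld and only disinherits once the task holds no mutexes;
- task names and priorities are hypothetical.

The test replays the priority-inversion scenario with low = 1, medium = 2 and high = 3.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    const char *name;
    unsigned base_priority;   /* models uxBasePriority          */
    unsigned priority;        /* models uxPriority (effective)  */
} prio_task_t;

typedef struct {
    prio_task_t *holder;      /* models pxMutexHolder */
} mutex_model_t;

/* Non-blocking take. On failure the caller would block, but first the
 * holder inherits the caller's priority if it is lower. */
static bool mutex_take(mutex_model_t *m, prio_task_t *caller)
{
    if (m->holder == NULL) {
        m->holder = caller;
        return true;
    }
    if (m->holder->priority < caller->priority) {
        m->holder->priority = caller->priority;   /* inherit */
    }
    return false;
}

/* Give: only the holder may give; it drops back to its base priority. */
static void mutex_give(mutex_model_t *m, prio_task_t *caller)
{
    assert(m->holder == caller);
    caller->priority = caller->base_priority;     /* disinherit */
    m->holder = NULL;
}
```

While the low-priority holder runs at priority 3, a medium-priority (2) task can no longer preempt it. The high-priority task therefore waits only for the critical section itself.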

| Function | Description |
| --- | --- |
| xSemaphoreCreateMutex | Create a mutex dynamically |
| xSemaphoreCreateMutexStatic | Create a mutex statically |

```c
#define xSemaphoreCreateMutex() xQueueCreateMutex(queueQUEUE_TYPE_MUTEX)

QueueHandle_t xQueueCreateMutex(const uint8_t ucQueueType)
{
    Queue_t *pxNewQueue;
    const UBaseType_t uxMutexLength = (UBaseType_t) 1, uxMutexSize = (UBaseType_t) 0;

    /* A mutex is a queue of length 1 with zero-size items. */
    pxNewQueue = (Queue_t *) xQueueGenericCreate(uxMutexLength, uxMutexSize, ucQueueType);
    prvInitialiseMutex(pxNewQueue);

    return pxNewQueue;
}

static void prvInitialiseMutex(Queue_t *pxNewQueue)
{
    if (pxNewQueue != NULL) {
        /* pxMutexHolder and uxQueueType are macros that reuse the pcTail and
           pcHead fields; uxQueueType == queueQUEUE_IS_MUTEX (NULL) marks a mutex. */
        pxNewQueue->pxMutexHolder = NULL;
        pxNewQueue->uxQueueType = queueQUEUE_IS_MUTEX;
        pxNewQueue->u.uxRecursiveCallCount = 0;
        vPortCPUInitializeMutex(&pxNewQueue->mux);
        traceCREATE_MUTEX(pxNewQueue);

        /* Start in the "available" state by giving the mutex once. */
        (void) xQueueGenericSend(pxNewQueue, NULL, (TickType_t) 0U, queueSEND_TO_BACK);
    } else {
        traceCREATE_MUTEX_FAILED();
    }
}

void vPortCPUInitializeMutex(portMUX_TYPE *mux)
{
    mux->owner = portMUX_FREE_VAL;
    mux->count = 0;
}

typedef struct {
    uint32_t owner;   /* which core holds the spinlock, or portMUX_FREE_VAL */
    uint32_t count;   /* nesting count */
#ifdef CONFIG_FREERTOS_PORTMUX_DEBUG
    const char *lastLockedFn;
    int lastLockedLine;
#endif
} portMUX_TYPE;
```

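Recursive mutexes

A task that already holds an ordinary mutex cannot take it again (it would block on itself). A recursive mutex is different: the task holding it can take it again, repeatedly, and the mutex only becomes available to other tasks once the holder has given it back as many times as it took it. Recursive mutexes also use priority inheritance, so a task must always release a recursive mutex when it is done with it.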
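The recursive behavior rests on the u.uxRecursiveCallCount field of Queue_t. Below is a hosted sketch of the counting rule, with hypothetical names and non-blocking takes:

- the holder's takes increment the count;
- its gives decrement the count;
- the mutex is released when the count returns to zero.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    const void *holder;        /* models pxMutexHolder           */
    unsigned recursive_count;  /* models u.uxRecursiveCallCount  */
} rec_mutex_t;

/* Non-blocking take: the holder may take again; anyone else fails. */
static bool rec_take(rec_mutex_t *m, const void *task)
{
    if (m->holder == task) {
        m->recursive_count++;
        return true;
    }
    if (m->holder != NULL) return false;     /* a real take would block here */
    m->holder = task;
    m->recursive_count = 1;
    return true;
}

/* Give: only the holder may give; released once takes and gives match. */
static bool rec_give(rec_mutex_t *m, const void *task)
{
    if (m->holder != task) return false;
    assert(m->recursive_count > 0);
    if (--m->recursive_count == 0) m->holder = NULL;
    return true;
}
```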

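| Function | Description |
| --- | --- |
| xSemaphoreCreateRecursiveMutex | Create a recursive mutex dynamically |
| xSemaphoreCreateRecursiveMutexStatic | Create a recursive mutex statically |
| xSemaphoreGiveRecursive | Give (release) a recursive mutex |
| xSemaphoreTakeRecursive | Take (acquire) a recursive mutex |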

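FreeRTOS software timers

Software timer callbacks run in the context of the timer service task, so a callback must never call an API function that can block the task. For example, never call vTaskDelay or vTaskDelayUntil in a timer callback, and never call queue or semaphore functions with a non-zero block time.

FreeRTOS provides many timer-related API functions. Most of them send commands to the timer service task through a FreeRTOS queue called the timer command queue. This queue is reserved for the software timer implementation and cannot be accessed directly by user code.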
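A single-threaded hosted model can illustrate the command-queue design. This is a sketch under simplifying assumptions, not FreeRTOS code:

- `timer_post` stands in for xTimerStart and xTimerStop posting to the timer command queue;
- `timer_task_step` stands in for one iteration of the timer service task;
- only start and stop commands exist;
- all names are hypothetical.

The model shows that the API only queues a command, while both the command processing and the callbacks run in the timer task. A blocking callback would therefore stall every timer.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_CMDS 8   /* assumption: stands in for configTIMER_QUEUE_LENGTH */

typedef struct sw_timer sw_timer_t;
typedef void (*sw_timer_cb_t)(sw_timer_t *);

struct sw_timer {
    uint32_t period;         /* in ticks                          */
    bool auto_reload;        /* periodic (true) or one-shot       */
    bool active;
    uint32_t expiry;         /* absolute tick of next expiry      */
    sw_timer_cb_t callback;
    unsigned fired;          /* hypothetical counter for the test */
};

typedef enum { CMD_START, CMD_STOP } cmd_id_t;
typedef struct { cmd_id_t id; sw_timer_t *timer; uint32_t tick; } timer_cmd_t;

static timer_cmd_t cmd_queue[MAX_CMDS];   /* models the timer command queue */
static size_t cmd_count;

/* API side: only post a command; nothing else happens here. */
static bool timer_post(cmd_id_t id, sw_timer_t *t, uint32_t now)
{
    if (cmd_count == MAX_CMDS) return false;   /* command queue full */
    cmd_queue[cmd_count].id = id;
    cmd_queue[cmd_count].timer = t;
    cmd_queue[cmd_count].tick = now;
    cmd_count++;
    return true;
}

/* Timer task side: drain commands, then run callbacks of expired timers. */
static void timer_task_step(sw_timer_t *timers[], size_t n, uint32_t now)
{
    size_t i;
    for (i = 0; i < cmd_count; i++) {
        sw_timer_t *t = cmd_queue[i].timer;
        if (cmd_queue[i].id == CMD_START) {
            assert(t->period > 0);
            t->active = true;
            t->expiry = cmd_queue[i].tick + t->period;
        } else {
            t->active = false;
        }
    }
    cmd_count = 0;
    for (i = 0; i < n; i++) {
        sw_timer_t *t = timers[i];
        while (t->active && (int32_t)(now - t->expiry) >= 0) {
            t->callback(t);                      /* runs in the timer task */
            if (t->auto_reload) t->expiry += t->period;
            else t->active = false;
        }
    }
}

static void count_cb(sw_timer_t *t) { t->fired++; }
```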

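| Function | Description |
| --- | --- |
| xTimerReset() | Reset a software timer |
| xTimerResetFromISR() | Reset a software timer; for use in interrupt service routines |
| xTimerCreate() | Create a software timer dynamically |
| xTimerCreateStatic() | Create a software timer statically |
| xTimerStart() | Start a software timer; for use in tasks |
| xTimerStartFromISR() | Start a software timer; for use in interrupts |
| xTimerStop() | Stop a software timer; for use in tasks |
| xTimerStopFromISR() | Stop a software timer; for use in interrupts |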

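FreeRTOS event groups

With semaphores, a task can only synchronize with a single event or task. Sometimes a task needs to synchronize with several events or tasks, which semaphores cannot handle, and FreeRTOS offers event groups as an optional solution.

Event groups are referenced through handles of type EventGroupHandle_t. When configUSE_16_BIT_TICKS is 1, an event group can store 8 event bits; when it is 0, an event group can store 24 event bits. All event bits of a group are stored in a single unsigned variable of type EventBits_t.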
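The wait condition of xEventGroupWaitBits is plain bit arithmetic. Below is a hosted sketch with hypothetical names and event bits. It assumes configUSE_16_BIT_TICKS = 0, so the low 24 bits carry events and the top 8 bits are reserved for kernel control flags.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef uint32_t event_bits_t;        /* EventBits_t with 32-bit ticks */

#define EVENT_BITS_MASK 0x00FFFFFFu   /* 24 usable event bits          */
#define BIT_UART (1u << 0)            /* hypothetical event bits       */
#define BIT_ADC  (1u << 1)

/* Wait for ANY of the requested bits, or for ALL of them (xWaitForAllBits). */
static bool wait_condition_met(event_bits_t current, event_bits_t wait_for, bool wait_for_all)
{
    assert(wait_for != 0 && (wait_for & ~EVENT_BITS_MASK) == 0);
    if (wait_for_all) return (current & wait_for) == wait_for;
    return (current & wait_for) != 0;
}

/* On success the waiter may ask for the awaited bits to be cleared (xClearOnExit). */
static event_bits_t clear_on_exit(event_bits_t current, event_bits_t wait_for)
{
    return current & ~wait_for;
}
```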

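| Function | Description |
| --- | --- |
| xEventGroupCreate() | Create an event group dynamically |
| xEventGroupCreateStatic() | Create an event group statically |
| xEventGroupClearBits() | Clear the specified event bits; for use in tasks |
| xEventGroupClearBitsFromISR() | Clear the specified event bits; for use in interrupt service routines |
| xEventGroupSetBits() | Set the specified event bits to 1; for use in tasks |
| xEventGroupSetBitsFromISR() | Set the specified event bits to 1; for use in interrupt service routines |
| xEventGroupGetBits() | Get the current value of the event group (all event bits); for use in tasks |
| xEventGroupGetBitsFromISR() | Get the current value of the event group; for use in interrupt service routines |
| xEventGroupWaitBits() | Wait for the specified event bits |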

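FreeRTOS task notifications

Every FreeRTOS task has a 32-bit notification value, held in the ulNotifiedValue member of its task control block. A task notification is an event sent directly to a task: if the receiving task is blocked waiting for a notification, sending it one unblocks it. The notification can also update the receiving task's notification value in one of these ways:

Write the new value only if the previous notification has already been processed (otherwise leave the value unchanged)

Overwrite the receiving task's notification value

Set one or more bits of the notification value

Increment the notification value

Used appropriately, these update methods can replace queues, binary semaphores, counting semaphores and event groups in some situations, while being faster and using less RAM.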
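The update methods listed above map onto the eNotifyAction values handled by xTaskNotify, and ulTaskNotifyTake turns the value into a binary or counting semaphore. The hosted model below mirrors that switch and the take logic under hypothetical names. It omits blocking, the ready lists and multi-core handling.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { NOTIFY_SET_BITS, NOTIFY_INCREMENT, NOTIFY_OVERWRITE,
               NOTIFY_NO_OVERWRITE, NOTIFY_NO_ACTION } notify_action_t;

typedef struct {
    uint32_t value;   /* models ulNotifiedValue              */
    bool pending;     /* models eNotifyState == eNotified    */
} notify_slot_t;

/* Mirrors the switch in xTaskNotify; returns false only when
 * NOTIFY_NO_OVERWRITE finds an unprocessed notification. */
static bool notify(notify_slot_t *s, uint32_t value, notify_action_t action)
{
    bool ok = true;
    bool was_pending;

    assert(s != NULL);
    was_pending = s->pending;
    s->pending = true;
    switch (action) {
    case NOTIFY_SET_BITS:  s->value |= value; break;  /* event-group style */
    case NOTIFY_INCREMENT: s->value++;        break;  /* semaphore style   */
    case NOTIFY_OVERWRITE: s->value = value;  break;  /* mailbox style     */
    case NOTIFY_NO_OVERWRITE:
        if (!was_pending) s->value = value; else ok = false;
        break;
    case NOTIFY_NO_ACTION: break;
    }
    return ok;
}

/* Mirrors ulTaskNotifyTake without blocking: return the value, then
 * clear it (binary semaphore) or decrement it (counting semaphore). */
static uint32_t notify_take(notify_slot_t *s, bool clear_on_exit)
{
    uint32_t v;

    assert(s != NULL);
    v = s->value;
    if (v != 0) {
        if (clear_on_exit) s->value = 0;
        else s->value--;
    }
    s->pending = false;
    return v;
}
```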

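Limitations of task notifications:

A task notification has exactly one receiving task, the task it is sent to; in practice most applications fit this pattern.

The receiving task can block while waiting for a notification, but a sending task never blocks when a send fails.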

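| Function | Description |
| --- | --- |
| xTaskNotify | Send a notification with a value; the receiving task's previous value is not returned. For use in tasks |
| xTaskNotifyFromISR | Send a notification; interrupt version of xTaskNotify |
| xTaskNotifyGive | Send a notification without a value; increments the receiving task's notification value by 1. For use in tasks |
| vTaskNotifyGiveFromISR | Send a notification; interrupt version of xTaskNotifyGive |
| xTaskNotifyAndQuery | Send a notification with a value and also return the receiving task's previous value; for use in tasks |
| xTaskNotifyAndQueryFromISR | Interrupt version of xTaskNotifyAndQuery; for use in interrupt service routines |
| ulTaskNotifyTake | Take a notification; on exit the value is either cleared to 0 or decremented by 1. Used when a task notification serves as a binary or counting semaphore |
| xTaskNotifyWait | Wait for a notification; the full-featured receive function, more capable than ulTaskNotifyTake |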

```c
BaseType_t xTaskNotify(TaskHandle_t xTaskToNotify, uint32_t ulValue, eNotifyAction eAction)
{
    TCB_t * pxTCB;
    eNotifyValue eOriginalNotifyState;
    BaseType_t xReturn = pdPASS;

    configASSERT(xTaskToNotify);
    pxTCB = (TCB_t *) xTaskToNotify;

    taskENTER_CRITICAL(&xTaskQueueMutex);
    {
        eOriginalNotifyState = pxTCB->eNotifyState;
        pxTCB->eNotifyState = eNotified;

        switch (eAction) {
        case eSetBits:
            pxTCB->ulNotifiedValue |= ulValue;
            break;
        case eIncrement:
            (pxTCB->ulNotifiedValue)++;
            break;
        case eSetValueWithOverwrite:
            pxTCB->ulNotifiedValue = ulValue;
            break;
        case eSetValueWithoutOverwrite:
            /* Fail if the previous notification has not been consumed yet. */
            if (eOriginalNotifyState != eNotified) {
                pxTCB->ulNotifiedValue = ulValue;
            } else {
                xReturn = pdFAIL;
            }
            break;
        case eNoAction:
            /* Notify without changing the value. */
            break;
        }

        /* If the task was blocked waiting for a notification, make it ready. */
        if (eOriginalNotifyState == eWaitingNotification) {
            (void) uxListRemove(&(pxTCB->xGenericListItem));
            prvAddTaskToReadyList(pxTCB);

            /* The task should not be on an event list. */
            configASSERT(listLIST_ITEM_CONTAINER(&(pxTCB->xEventListItem)) == NULL);

            if (tskCAN_RUN_HERE(pxTCB->xCoreID) && pxTCB->uxPriority > pxCurrentTCB[xPortGetCoreID()]->uxPriority) {
                /* Higher priority than the task running on this core: yield here. */
                portYIELD_WITHIN_API();
            } else if (pxTCB->xCoreID != xPortGetCoreID()) {
                /* Not bound to this core: let the other core decide whether to preempt. */
                taskYIELD_OTHER_CORE(pxTCB->xCoreID, pxTCB->uxPriority);
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
        } else {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    taskEXIT_CRITICAL(&xTaskQueueMutex);

    return xReturn;
}

uint32_t ulTaskNotifyTake(BaseType_t xClearCountOnExit, TickType_t xTicksToWait)
{
    TickType_t xTimeToWake;
    uint32_t ulReturn;

    taskENTER_CRITICAL(&xTaskQueueMutex);
    {
        /* Only block if the notification value is still zero. */
        if (pxCurrentTCB[xPortGetCoreID()]->ulNotifiedValue == 0UL) {
            pxCurrentTCB[xPortGetCoreID()]->eNotifyState = eWaitingNotification;

            if (xTicksToWait > (TickType_t) 0) {
                if (uxListRemove(&(pxCurrentTCB[xPortGetCoreID()]->xGenericListItem)) == (UBaseType_t) 0) {
                    portRESET_READY_PRIORITY(pxCurrentTCB[xPortGetCoreID()]->uxPriority, uxTopReadyPriority);
                } else {
                    mtCOVERAGE_TEST_MARKER();
                }
#if (INCLUDE_vTaskSuspend == 1)
                {
                    if (xTicksToWait == portMAX_DELAY) {
                        /* Wait indefinitely: park the task in the suspended list. */
                        traceMOVED_TASK_TO_SUSPENDED_LIST(pxCurrentTCB);
                        vListInsertEnd(&xSuspendedTaskList, &(pxCurrentTCB[xPortGetCoreID()]->xGenericListItem));
                    } else {
                        xTimeToWake = xTickCount + xTicksToWait;
                        prvAddCurrentTaskToDelayedList(xPortGetCoreID(), xTimeToWake);
                    }
                }
#else
                {
                    xTimeToWake = xTickCount + xTicksToWait;
                    prvAddCurrentTaskToDelayedList(xPortGetCoreID(), xTimeToWake);
                }
#endif
                portYIELD_WITHIN_API();
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
        } else {
            mtCOVERAGE_TEST_MARKER();
        }
    }
    taskEXIT_CRITICAL(&xTaskQueueMutex);

    taskENTER_CRITICAL(&xTaskQueueMutex);
    {
        ulReturn = pxCurrentTCB[xPortGetCoreID()]->ulNotifiedValue;

        if (ulReturn != 0UL) {
            if (xClearCountOnExit != pdFALSE) {
                pxCurrentTCB[xPortGetCoreID()]->ulNotifiedValue = 0UL;   /* binary-semaphore style */
            } else {
                (pxCurrentTCB[xPortGetCoreID()]->ulNotifiedValue)--;     /* counting-semaphore style */
            }
        } else {
            mtCOVERAGE_TEST_MARKER();
        }

        pxCurrentTCB[xPortGetCoreID()]->eNotifyState = eNotWaitingNotification;
    }
    taskEXIT_CRITICAL(&xTaskQueueMutex);

    return ulReturn;
}
```

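The idle task in FreeRTOS

The idle task is not created only to guarantee that at least one task can run after the scheduler starts; it also does the following work:

Checks whether any task has deleted itself; if so, the idle task frees the stack and task control block memory of the deleted task

Runs the user-defined idle task hook function

Checks whether low-power tickless mode is enabled and, if it is, performs the corresponding handling

Users can create application tasks at the same priority as the idle task. When configIDLE_SHOULD_YIELD is 1, the idle task gives up its time slice to ready application tasks of that priority.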

```c
static portTASK_FUNCTION(prvIdleTask, pvParameters)
{
    /* Silence the unused-parameter warning. */
    (void) pvParameters;

    for (;;) {
        /* Free the TCB and stack of tasks that deleted themselves. */
        prvCheckTasksWaitingTermination();

#if (configUSE_PREEMPTION == 0)
        {
            /* Without preemption, keep yielding so other tasks get to run. */
            taskYIELD();
        }
#endif

#if ((configUSE_PREEMPTION == 1) && (configIDLE_SHOULD_YIELD == 1))
        {
            /* Yield to application tasks that share the idle priority. */
            if (listCURRENT_LIST_LENGTH(&(pxReadyTasksLists[tskIDLE_PRIORITY])) > (UBaseType_t) 1) {
                taskYIELD();
            } else {
                mtCOVERAGE_TEST_MARKER();
            }
        }
#endif

#if (configUSE_IDLE_HOOK == 1)
        {
            extern void vApplicationIdleHook(void);
            /* User idle hook; it must never block. */
            vApplicationIdleHook();
        }
#endif
        {
            /* ESP-IDF's own idle hook. */
            esp_vApplicationIdleHook();
        }

#if (configUSE_TICKLESS_IDLE != 0)
        {
            TickType_t xExpectedIdleTime;
            BaseType_t xEnteredSleep = pdFALSE;

            /* Preliminary check outside the critical section... */
            xExpectedIdleTime = prvGetExpectedIdleTime();

            if (xExpectedIdleTime >= configEXPECTED_IDLE_TIME_BEFORE_SLEEP) {
                taskENTER_CRITICAL(&xTaskQueueMutex);
                {
                    /* ...then re-check with the kernel state locked. */
                    configASSERT(xNextTaskUnblockTime >= xTickCount);
                    xExpectedIdleTime = prvGetExpectedIdleTime();

                    if (xExpectedIdleTime >= configEXPECTED_IDLE_TIME_BEFORE_SLEEP) {
                        traceLOW_POWER_IDLE_BEGIN();
                        xEnteredSleep = portSUPPRESS_TICKS_AND_SLEEP(xExpectedIdleTime);
                        traceLOW_POWER_IDLE_END();
                    } else {
                        mtCOVERAGE_TEST_MARKER();
                    }
                }
                taskEXIT_CRITICAL(&xTaskQueueMutex);
            } else {
                mtCOVERAGE_TEST_MARKER();
            }

            /* No sleep entered: idle the CPU until the next interrupt. */
            if (!xEnteredSleep) {
                esp_vApplicationWaitiHook();
            }
        }
#else
        esp_vApplicationWaitiHook();
#endif
    }
}
```

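FreeRTOS low-power tickless mode

In tickless mode, once the processor is running the idle task, the system tick interrupt (the tick timer interrupt) is switched off. The processor is then only woken from low-power mode when some other interrupt occurs or another task needs to run. This raises two problems:

Switching off the tick interrupt stops the tick counter, so system time stops.

The fix is to record how long the tick interrupt was off and add that time back when it is re-enabled; a timer is needed to measure this period.

How can the next task to run be woken at exactly the right time?

Before entering low-power mode, the processor works out how long it is until the next task must run and starts a timer with that period. When the timer expires, its interrupt wakes the processor from low-power mode.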

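Tickless implementation

To use tickless mode, first set configUSE_TICKLESS_IDLE to 1 in FreeRTOSConfig.h.

With tickless mode enabled, the kernel calls the portSUPPRESS_TICKS_AND_SLEEP macro to handle the low-power work when both of the following are true:

The idle task is the only task able to run, because all other tasks are blocked or suspended

The expected time in low-power mode is at least configEXPECTED_IDLE_TIME_BEFORE_SLEEP ticks

There is no hard limit on how long the processor may stay in low-power mode: one tick is allowed, and so is the longest period the tick timer can count. Very short periods are not worth the overhead, however, so a lower bound is needed; configEXPECTED_IDLE_TIME_BEFORE_SLEEP provides it.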

```c
#define portSUPPRESS_TICKS_AND_SLEEP(idleTime) vApplicationSleep(idleTime)

bool IRAM_ATTR vApplicationSleep(TickType_t xExpectedIdleTime)
{
    bool result = false;

    portENTER_CRITICAL(&s_switch_lock);
    if (s_mode == PM_MODE_LIGHT_SLEEP && !s_is_switching) {
        /* Sleep until the earlier of the next FreeRTOS wake-up and the next esp_timer alarm. */
        int64_t next_esp_timer_alarm = esp_timer_get_next_alarm();
        int64_t now = esp_timer_get_time();
        int64_t time_until_next_alarm = next_esp_timer_alarm - now;
        int64_t wakeup_delay_us = portTICK_PERIOD_MS * 1000LL * xExpectedIdleTime;
        int64_t sleep_time_us = MIN(wakeup_delay_us, time_until_next_alarm);

        if (sleep_time_us >= configEXPECTED_IDLE_TIME_BEFORE_SLEEP * portTICK_PERIOD_MS * 1000LL) {
            /* Wake up slightly early to account for the time needed to leave light sleep. */
            esp_sleep_enable_timer_wakeup(sleep_time_us - LIGHT_SLEEP_EARLY_WAKEUP_US);
#ifdef CONFIG_PM_TRACE
            esp_sleep_pd_config(ESP_PD_DOMAIN_RTC_PERIPH, ESP_PD_OPTION_ON);
#endif
            int core_id = xPortGetCoreID();
            ESP_PM_TRACE_ENTER(SLEEP, core_id);
            int64_t sleep_start = esp_timer_get_time();
            esp_light_sleep_start();
            int64_t slept_us = esp_timer_get_time() - sleep_start;
            ESP_PM_TRACE_EXIT(SLEEP, core_id);

            /* Compensate the tick count for the whole ticks spent asleep. */
            uint32_t slept_ticks = slept_us / (portTICK_PERIOD_MS * 1000LL);
            if (slept_ticks > 0) {
                vTaskStepTick(slept_ticks);
                /* Set CCOUNT just below CCOMPARE so the tick interrupt fires
                   right away, then wait until it is pending. */
                XTHAL_SET_CCOUNT(XTHAL_GET_CCOMPARE(XT_TIMER_INDEX) - 16);
                while (!(XTHAL_GET_INTERRUPT() & BIT(XT_TIMER_INTNUM))) {
                    ;
                }
            }
            result = true;
        }
    }
    portEXIT_CRITICAL(&s_switch_lock);
    return result;
}
```
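The arithmetic in vApplicationSleep can be checked in isolation. The hosted sketch below uses hypothetical names and times in microseconds, and leaves out the LIGHT_SLEEP_EARLY_WAKEUP_US margin and the actual sleep call. It computes the planned sleep time and the number of whole ticks passed to vTaskStepTick after waking.

```c
#include <assert.h>
#include <stdint.h>

static int64_t min_i64(int64_t a, int64_t b) { return a < b ? a : b; }

/* Planned sleep in microseconds, or 0 when the idle period is shorter than
 * min_idle_ticks (the role of configEXPECTED_IDLE_TIME_BEFORE_SLEEP). */
static int64_t plan_sleep_us(uint32_t expected_idle_ticks, uint32_t tick_period_ms,
                             int64_t time_until_next_alarm_us, uint32_t min_idle_ticks)
{
    int64_t tick_us, wakeup_delay_us, sleep_us;

    assert(tick_period_ms > 0);
    tick_us = (int64_t)tick_period_ms * 1000LL;                    /* one tick in us        */
    wakeup_delay_us = tick_us * expected_idle_ticks;               /* next FreeRTOS wake-up */
    sleep_us = min_i64(wakeup_delay_us, time_until_next_alarm_us); /* or next esp_timer alarm */
    return sleep_us >= (int64_t)min_idle_ticks * tick_us ? sleep_us : 0;
}

/* Whole ticks to pass to vTaskStepTick after waking up. */
static uint32_t slept_ticks(int64_t slept_us, uint32_t tick_period_ms)
{
    assert(tick_period_ms > 0);
    return (uint32_t)(slept_us / ((int64_t)tick_period_ms * 1000LL));
}
```

With a 10 ms tick, 50 expected idle ticks and an esp_timer alarm 200 ms away, the planned sleep is capped at 200 ms by the alarm. After waking 205 ms later, 20 whole ticks are added back.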

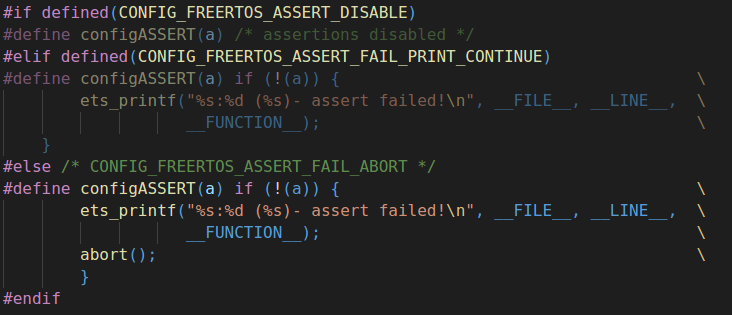

configASSERT settings
